DynamoDB Guide for the AWS SAA Exam

A guide to Amazon's NoSQL database, DynamoDB, written for anyone preparing for the AWS Certified Solutions Architect Associate (SAA) exam.

DynamoDB is a fully managed NoSQL database service from AWS, designed for applications that need low-latency data access at any scale. For the exam, you need to understand how it compares with other AWS database services such as RDS, ElastiCache, and Redshift, and why it is so often chosen for serverless architectures.

DynamoDB Basics

- Data Model: DynamoDB supports both key-value and document data models.

- Scalability: Storage grows automatically, and throughput scales automatically in on-demand mode (or through auto scaling in provisioned mode).

- Consistency: Reads are eventually consistent by default; strongly consistent reads can be requested per operation (they are not supported on global secondary indexes).

- Throughput: You can specify provisioned throughput (Read Capacity Units, Write Capacity Units) or use on-demand mode.

- Storage: SSD-based storage for low-latency, high-throughput workloads.

- Features:

    - DAX (DynamoDB Accelerator): In-memory caching for read-intensive workloads.

    - Global Tables: Multi-region replication for globally distributed applications.

    - Streams: Captures a time-ordered sequence of item-level changes in near real time; stream records are retained for 24 hours.

- Integration: Ideal for event-driven architectures using AWS Lambda.
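The Throughput bullet is easiest to reason about through capacity-unit arithmetic. The Python sketch below applies the documented sizing rules: one RCU covers one strongly consistent read per second of an item up to 4 KB (or two eventually consistent reads), one WCU covers one write per second of an item up to 1 KB, and transactional reads and writes cost twice as much. Item sizes round up to the next 4 KB for reads and 1 KB for writes. The helper names are illustrative and not part of any AWS SDK.

```python
import math

READ_UNIT_KB = 4   # one RCU: one strongly consistent read/s of an item up to 4 KB
WRITE_UNIT_KB = 1  # one WCU: one standard write/s of an item up to 1 KB


def required_rcus(item_size_kb: float, reads_per_second: int, mode: str = "strong") -> int:
    """Read capacity units needed for a steady read rate (illustrative helper)."""
    units_per_read = math.ceil(item_size_kb / READ_UNIT_KB)  # round size up to the next 4 KB
    strong_total = units_per_read * reads_per_second
    if mode == "strong":
        return strong_total
    if mode == "eventual":
        return math.ceil(strong_total / 2)  # eventually consistent reads cost half
    if mode == "transactional":
        return strong_total * 2  # transactional reads cost double
    raise ValueError(f"unknown read mode: {mode!r}")


def required_wcus(item_size_kb: float, writes_per_second: int, transactional: bool = False) -> int:
    """Write capacity units needed for a steady write rate (illustrative helper)."""
    units_per_write = math.ceil(item_size_kb / WRITE_UNIT_KB)  # round size up to the next 1 KB
    total = units_per_write * writes_per_second
    return total * 2 if transactional else total  # transactional writes cost double


if __name__ == "__main__":
    print(required_rcus(6, 10))                   # 20
    print(required_rcus(6, 10, mode="eventual"))  # 10
    print(required_wcus(2.5, 5))                  # 15
```

For example, 10 strongly consistent reads per second of 6 KB items need 20 RCUs, because each read rounds up to 8 KB (2 RCUs). The same traffic with eventually consistent reads needs only 10.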

DynamoDB vs. RDS

- Data Model:

    - DynamoDB: NoSQL, key-value store, schema-less.

    - RDS: Relational database, supports SQL, schema-based (MySQL, PostgreSQL, SQL Server, Oracle, etc.).

- Scaling:

    - DynamoDB: Scales horizontally, no management of infrastructure required.

    - RDS: Vertical scaling (more compute/storage for performance), supports read replicas for scaling reads.

- Use Cases:

    - DynamoDB: Applications with flexible schema, high write throughput, IoT, mobile apps, real-time data processing.

    - RDS: Traditional business applications requiring relational databases, OLTP, complex queries, joins.

- Performance:

    - DynamoDB: Consistent single-digit millisecond latency, even at very high throughput.

    - RDS: High performance for relational workloads with complex transactions and queries.

- Serverless:

    - DynamoDB: Serverless by design, no server management.

    - RDS: Not serverless, though Aurora Serverless is an alternative for relational databases.

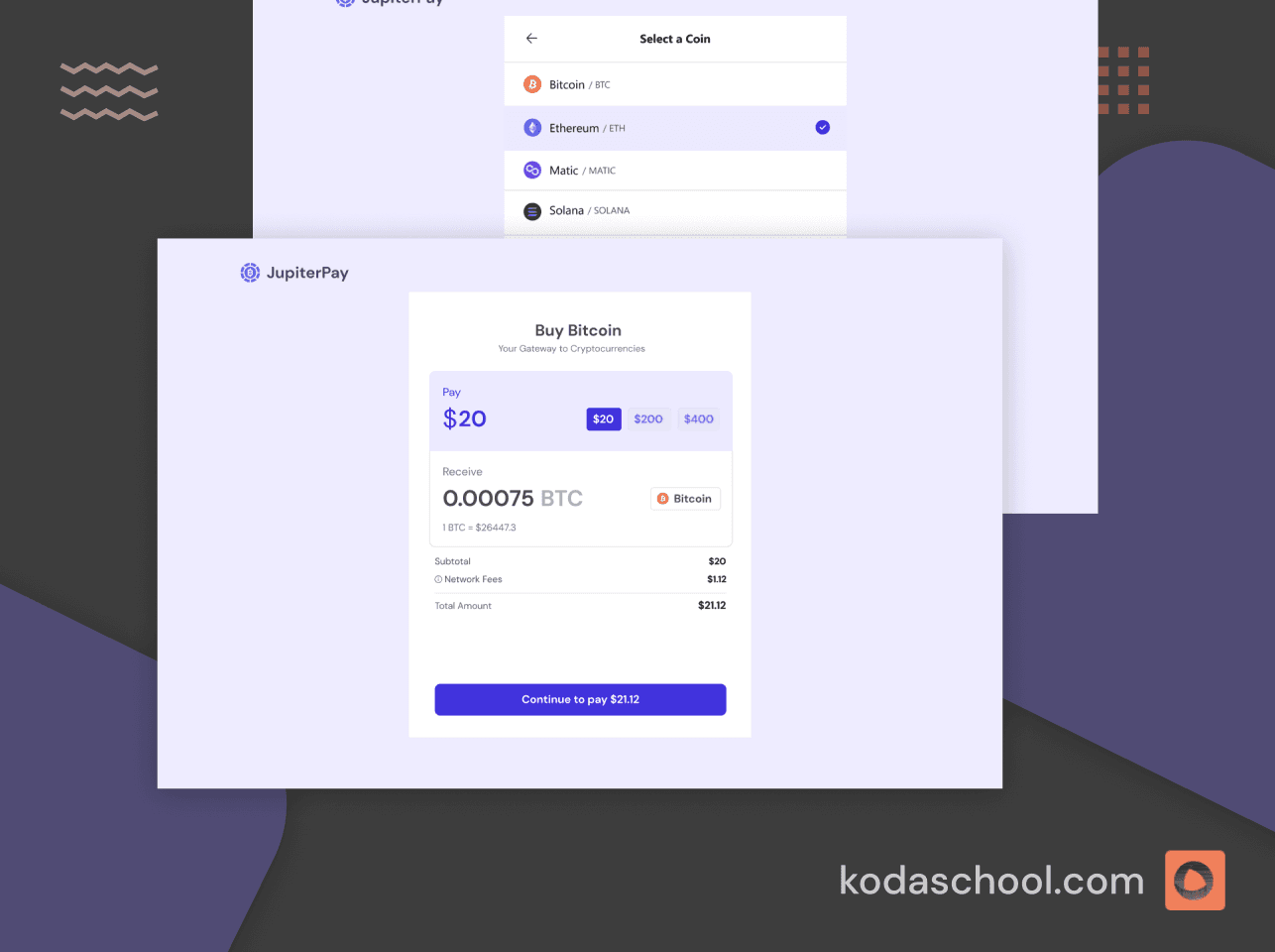

DynamoDB vs. ElastiCache

- Purpose:

    - DynamoDB: Primary NoSQL database.

    - ElastiCache: In-memory caching service that speeds up data retrieval for databases.

- Data Model:

    - DynamoDB: Persistent, durable data store.

    - ElastiCache: In-memory store (Redis or Memcached), not durable by default, often used as a cache for RDS or DynamoDB.

- Performance:

    - DynamoDB: High throughput for transactional data storage and retrieval.

    - ElastiCache: Sub-millisecond latency for frequently accessed data.

- Use Cases:

    - DynamoDB: Store and retrieve structured/unstructured data at scale.

    - ElastiCache: Cache hot data to reduce latency for read-heavy workloads in RDS or DynamoDB.

DynamoDB vs. Redshift

- Purpose:

    - DynamoDB: NoSQL, optimized for key-value and document storage.

    - Redshift: Data warehouse for OLAP (Online Analytical Processing).

- Data Model:

    - DynamoDB: Schema-less, real-time read/write operations.

    - Redshift: Relational, columnar data warehouse for complex queries and aggregations over large datasets.

- Scaling:

    - DynamoDB: Horizontal scaling, high availability, fault-tolerant.

    - Redshift: Scales out by adding compute nodes, optimized for complex analytical queries.

- Use Cases:

    - DynamoDB: Real-time web/mobile apps, IoT, user profiles.

    - Redshift: Analytical queries, business intelligence, big data analysis.

Importance of DynamoDB for Serverless Architectures

DynamoDB plays a key role in serverless applications, making it essential for AWS Certified Solutions Architect Associate (SAA) exam preparation. Here’s why:

- Scalability: Serverless applications often see unpredictable traffic. In on-demand mode (or with auto scaling in provisioned mode), DynamoDB adjusts throughput without manual intervention.

- Pay-Per-Use: DynamoDB's on-demand capacity mode fits serverless cost models well: you pay per read and write request (plus storage) instead of paying for idle capacity.

- Integration with AWS Lambda: DynamoDB Streams enable real-time event-driven architectures when combined with AWS Lambda. Lambda functions can be triggered based on data changes in DynamoDB, ensuring a reactive and scalable system.

- No Infrastructure Management: With DynamoDB being a fully managed service, serverless applications can focus purely on business logic without worrying about underlying infrastructure or scaling.

- Global Replication: DynamoDB Global Tables offer a seamless way to build globally distributed serverless applications, giving users low-latency access from whichever replica region is closest to them.
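The Streams-plus-Lambda integration described above can be sketched as a Python handler for a stream-triggered function. Lambda delivers stream records in batches under `event["Records"]`; each record has an `eventName` (`INSERT`, `MODIFY`, or `REMOVE`) and a `dynamodb` section whose `Keys`, `NewImage`, and `OldImage` use DynamoDB's typed JSON (for example `{"S": "p1"}`). Which images appear depends on the stream view type you configure. The `_plain` helper is a hypothetical simplification that only handles scalar attributes, and the downstream action is left as a placeholder.

```python
def _plain(attribute_map):
    """Flatten scalar DynamoDB JSON ({"S": "x"}, {"N": "1"}) into plain string values.

    Nested maps and lists are not handled; boto3's TypeDeserializer covers those.
    """
    return {name: next(iter(typed.values())) for name, typed in attribute_map.items()}


def lambda_handler(event, context):
    """Process a batch of DynamoDB Streams records delivered to Lambda."""
    changes = []
    for record in event.get("Records", []):
        change = record["dynamodb"]
        item = {
            "action": record["eventName"],  # INSERT, MODIFY or REMOVE
            "keys": _plain(change["Keys"]),
        }
        # NewImage/OldImage are present only if the stream view type includes them.
        if "NewImage" in change:
            item["new"] = _plain(change["NewImage"])
        if "OldImage" in change:
            item["old"] = _plain(change["OldImage"])
        # A real function would trigger its downstream action here
        # (send a notification, update a search index, etc.).
        changes.append(item)
    return {"processed": len(changes), "changes": changes}
```

Returning a summary keeps the sketch easy to test; in production the side effects in the loop matter more than the return value.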

Common DynamoDB Scenarios on the SAA Exam

- Choosing Between NoSQL and Relational Databases:

    - Scenarios requiring high scalability, flexible schema, or high write throughput would typically favor DynamoDB over RDS.

- In-Memory Caching with DAX:

    - If the workload is read-heavy, DynamoDB Accelerator (DAX) adds an in-memory caching layer that cuts read latency from milliseconds to microseconds.

- Real-Time Analytics with DynamoDB Streams:

    - Exam questions may present scenarios where other services need real-time updates when data in DynamoDB changes; this can be implemented with DynamoDB Streams and Lambda.

- Global Tables for Disaster Recovery:

    - Global Tables replicate a table across multiple regions, providing high availability and disaster recovery, a likely scenario for the exam.

- Monitoring and Optimizing Costs:

    - Knowing when to use provisioned capacity versus on-demand capacity to optimize costs is likely to be a key aspect of DynamoDB-related exam questions.

Sample Quiz

Question 1

A gaming company is building a leaderboard system for a mobile game. The application needs to handle high write volumes and quickly retrieve the top players based on scores. The developers want a solution that provides high availability across multiple AWS regions to ensure the same experience for players globally. Which architecture would best meet these requirements?

A) Use Amazon RDS with Multi-AZ deployment and a read replica in each region.

B) Use Amazon DynamoDB with Global Tables and DynamoDB Streams to sync scores across regions.

C) Use Amazon DynamoDB with DynamoDB Accelerator (DAX) and provision read replicas manually.

D) Use Amazon Redshift to handle the aggregation of scores and replicate data between regions.

Answer: B

DynamoDB Global Tables replicate data across multiple regions automatically, giving players low-latency access wherever they are and keeping the leaderboard available if a region fails. DynamoDB also absorbs high write volumes, and DynamoDB Streams can drive real-time updates. Option C is wrong because DynamoDB has no read replicas to provision manually, and DAX is a regional cache that does not provide multi-region availability. In A, RDS Multi-AZ provides failover only within a region, and cross-region read replicas are read-only, so every write still goes to a single region. Redshift (D) is suited to OLAP workloads rather than real-time, transactional data like leaderboards.

Question 2

You are developing a serverless web application using AWS Lambda, API Gateway, and Amazon DynamoDB. The application will allow users to create, update, and delete items in a DynamoDB table. You need to ensure that updates to the table are processed in real-time, triggering downstream actions. Additionally, the solution must handle millions of write operations per second and provide low-latency reads. Which combination of services would best fulfill these requirements?

A) Use DynamoDB Streams with AWS Lambda for real-time processing and enable DynamoDB Accelerator (DAX) for low-latency reads.

B) Use Amazon SQS with AWS Lambda to process updates and cache read results using Amazon ElastiCache.

C) Use Amazon RDS with AWS Lambda Triggers and configure Multi-AZ for failover.

D) Use Amazon Redshift with AWS Lambda and manually scale read replicas for real-time updates.

Answer: A

DynamoDB Streams can capture changes to the table and trigger Lambda functions to process updates in real time, which aligns with the requirement for downstream actions. DAX provides an in-memory cache to optimize read performance, reducing latency for users. SQS is typically used for decoupling services but wouldn’t provide real-time updates as efficiently. RDS and Redshift are more suitable for relational and analytical workloads, respectively, and wouldn’t handle millions of transactions as efficiently as DynamoDB in this scenario.

Question 3

A media streaming service is experiencing unpredictable traffic spikes based on user demand. They are currently using DynamoDB with provisioned capacity, but frequently run into throughput limits during traffic surges. The service team wants to ensure the database can handle traffic spikes automatically, while also minimizing cost when usage is low. What is the best way to achieve this?

A) Switch to DynamoDB On-Demand capacity mode to automatically adjust to workload demands.

B) Increase the provisioned capacity and enable Auto Scaling to handle traffic surges.

C) Use DynamoDB Streams with AWS Lambda to offload processing during traffic spikes.

D) Implement Amazon ElastiCache for Redis as an additional caching layer in front of DynamoDB.

Answer: A

DynamoDB On-Demand mode is designed for applications with unpredictable traffic patterns: it adapts to the request rate without capacity planning, and because you pay per request, costs fall when activity is low. Auto Scaling (B) adjusts provisioned capacity gradually, so it suits predictable traffic but can lag behind sudden spikes. DynamoDB Streams with Lambda (C) processes changes after they are written and does nothing to raise throughput limits. ElastiCache (D) can reduce the read load on the database but doesn't address the underlying capacity limits directly.
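Switching a table to on-demand, as in answer A, is a single `UpdateTable` call with `BillingMode="PAY_PER_REQUEST"`. The sketch below takes a boto3 DynamoDB client as a parameter (any object with the same `describe_table` and `update_table` methods works, which keeps it testable without AWS credentials). The helper name is hypothetical.

```python
def switch_to_on_demand(client, table_name: str) -> bool:
    """Move a provisioned-capacity table to on-demand billing.

    Returns True if an update was requested, False if the table was already on-demand.
    """
    table = client.describe_table(TableName=table_name)["Table"]
    # BillingModeSummary can be absent for provisioned tables, so default to PROVISIONED.
    mode = table.get("BillingModeSummary", {}).get("BillingMode", "PROVISIONED")
    if mode == "PAY_PER_REQUEST":
        return False  # already on-demand; nothing to change
    client.update_table(TableName=table_name, BillingMode="PAY_PER_REQUEST")
    return True
```

With a real client, `switch_to_on_demand(boto3.client("dynamodb"), "MediaSessions")` would request the change (the table name is a placeholder). Checking the current mode first avoids an unnecessary update call.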

Question 4

A fintech company needs to store transactional data from millions of daily users, ensuring that the data is globally available, highly consistent, and replicated across multiple AWS regions. They require minimal latency for both read and write operations, and any data loss due to network failure must be prevented. Which approach should they take to ensure high availability, strong consistency, and fault tolerance?

A) Use DynamoDB with Global Tables and specify strong consistency for reads across regions.

B) Use Amazon RDS with Multi-AZ deployment and cross-region replication for disaster recovery.

C) Use Amazon ElastiCache with Redis for global replication and strong consistency.

D) Use Amazon DynamoDB in one region and replicate the data manually across regions using Lambda functions.

Answer: A

DynamoDB Global Tables provide automatic multi-region replication, giving high availability and low-latency access to data globally, which makes A the best of these options. Keep in mind that Global Tables replicate asynchronously by default, so a strongly consistent read is guaranteed to reflect the latest writes made in its own region, while writes from other regions may not have arrived yet. RDS Multi-AZ (B) provides failover within a single region; cross-region copies must be added separately with read replicas rather than being built in as they are with Global Tables. ElastiCache (C) is an in-memory cache, not suitable for long-term, highly consistent, persistent storage. Manual replication using Lambda (D) increases operational complexity.
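For Global Tables (version 2019.11.21), a replica region is added to an existing table through `UpdateTable` with a `ReplicaUpdates` entry. This sketch assumes the table already meets the global tables prerequisites (the AWS CLI walkthrough, for instance, creates the source table with DynamoDB Streams enabled using new and old images) and, for simplicity, adds one region per call. The helper name is hypothetical, and the client is passed in so the function can be exercised with a stub.

```python
def add_replica(client, table_name: str, region_name: str) -> dict:
    """Request a new replica of table_name in region_name (global tables 2019.11.21)."""
    response = client.update_table(
        TableName=table_name,
        ReplicaUpdates=[{"Create": {"RegionName": region_name}}],
    )
    # The replica is built in the background; report each listed replica's current status.
    replicas = response["TableDescription"].get("Replicas", [])
    return {r["RegionName"]: r.get("ReplicaStatus") for r in replicas}
```

With a real client, `add_replica(boto3.client("dynamodb", region_name="us-east-1"), "Transactions", "eu-west-1")` would start building a replica in eu-west-1 (table and region names are placeholders). Poll `describe_table` until the new replica reports `ACTIVE` before relying on it.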

Question 5

You are managing a SaaS application that uses DynamoDB to store user activity logs. The compliance team has asked you to ensure that data can be restored in case of accidental deletion or corruption. The solution should allow you to restore the database to any point in time over the past 30 days. What should you do to meet this requirement?

A) Enable DynamoDB Streams and configure AWS Lambda to create backup snapshots every 24 hours.

B) Enable DynamoDB Continuous Backups and Point-in-Time Recovery (PITR).

C) Use AWS Backup to create daily backups and manually restore data if needed.

D) Use Amazon S3 to store daily exports of your DynamoDB table and implement a custom restore solution.

Answer: B

DynamoDB Continuous Backups with Point-in-Time Recovery (PITR) let you restore a table to any second within the last 35 days, which directly satisfies the 30-day requirement. Daily backups with AWS Backup (C) can only restore to the moments when those backups were taken, not to an arbitrary point in time. Exporting data to S3 (D) or creating custom snapshots (A) has the same limitation and adds significant operational overhead compared with the built-in PITR feature.
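Enabling PITR and restoring from it map to two DynamoDB API calls, `UpdateContinuousBackups` and `RestoreTableToPointInTime`; a restore always creates a new table rather than overwriting the source. The sketch below assumes a boto3-style client is passed in. The helper names and the client-side 35-day check are illustrative simplifications (the authoritative earliest restore time is reported by `DescribeContinuousBackups`).

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

PITR_WINDOW = timedelta(days=35)  # maximum look-back period for PITR


def enable_pitr(client, table_name: str) -> None:
    """Turn on continuous backups with point-in-time recovery for a table."""
    client.update_continuous_backups(
        TableName=table_name,
        PointInTimeRecoverySpecification={"PointInTimeRecoveryEnabled": True},
    )


def restore_to_new_table(client, source: str, target: str,
                         restore_to: datetime, now: Optional[datetime] = None):
    """Restore `source` as it was at `restore_to` into a brand-new table named `target`."""
    now = now or datetime.now(timezone.utc)
    # Simplified guard: the real earliest restorable time can be later than 35 days ago,
    # for example if PITR was enabled recently.
    if restore_to > now or now - restore_to > PITR_WINDOW:
        raise ValueError("restore time is outside the point-in-time recovery window")
    return client.restore_table_to_point_in_time(
        SourceTableName=source,
        TargetTableName=target,
        RestoreDateTime=restore_to,
    )
```

For the compliance scenario, you would call `enable_pitr` once on the activity-log table and, after an accidental deletion, restore to a timestamp just before the incident, then point the application at the restored table.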