Learning S3 Lifecycle Policies for the AWS SAA exam

Learn how AWS S3 lifecycle policies simplify storage management, reduce costs, and ensure data compliance. Discover storage class transitions, use cases, and tips for AWS SAA exam preparation!

Introduction

Amazon Simple Storage Service (S3) is a foundational offering in cloud computing, designed to store and retrieve any amount of data from anywhere on the web. Known for its scalability, durability, and reliability, AWS S3 powers countless applications, from website hosting and big data analytics to backup and disaster recovery. Its pay-as-you-go model ensures flexibility, making it a preferred choice for businesses of all sizes.

However, as data accumulates over time, managing storage costs and ensuring efficient utilization can become challenging. Factors such as storing infrequently accessed data in high-cost tiers or retaining outdated files can inflate expenses unnecessarily. Additionally, manually managing data transitions across storage classes can be tedious and error-prone.

This is where AWS S3 lifecycle policies come into play. By automating data transitions between storage classes and setting expiration timelines, lifecycle policies empower users to streamline storage management. These policies not only help reduce costs but also ensure compliance with data retention requirements, all while minimizing manual intervention.

What Are S3 Lifecycle Policies?

AWS S3 lifecycle policies are a set of rules that automate the management of object storage over time. Designed to optimize storage costs and simplify data retention processes, these policies enable users to control how objects are stored, transitioned, or deleted based on their age or other defined criteria.

With lifecycle policies, you can define rules that:

- Transition objects between S3 storage classes (e.g., from S3 Standard to S3 Glacier Flexible Retrieval) a set number of days after they are created, matching how often you expect them to be used.

- Delete objects that are no longer needed after a specified duration.

- Clean up incomplete multipart uploads to avoid unnecessary costs.
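As a rough sketch, all three kinds of action can live in a single rule. The Python dictionary below follows the shape of the lifecycle configuration accepted by the S3 API (for example, boto3's put_bucket_lifecycle_configuration call). The rule ID, the data/ prefix, and the day counts are illustrative assumptions.

```python
import json

# Hypothetical rule: the ID, prefix, and day counts are illustrative only.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "example-rule",
            "Filter": {"Prefix": "data/"},
            "Status": "Enabled",
            # 1. Transition: move objects to S3 Glacier Flexible Retrieval
            #    (API name "GLACIER") 90 days after creation.
            "Transitions": [{"Days": 90, "StorageClass": "GLACIER"}],
            # 2. Expiration: delete objects 365 days after creation.
            "Expiration": {"Days": 365},
            # 3. Clean-up: abort multipart uploads still incomplete
            #    7 days after they were started.
            "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": 7},
        }
    ]
}

print(json.dumps(lifecycle_configuration, indent=2))
```

With boto3 installed and AWS credentials configured, the dictionary could be applied with boto3.client("s3").put_bucket_lifecycle_configuration(Bucket="my-example-bucket", LifecycleConfiguration=lifecycle_configuration), where my-example-bucket is a placeholder. That call replaces any lifecycle configuration the bucket already has.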

Benefits of S3 Lifecycle Policies:

- Cost Optimization: By automatically moving infrequently accessed or archival data to cost-effective storage classes, lifecycle policies minimize unnecessary expenses.

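- Data Retention Compliance: Expiration rules can delete data automatically once a required retention period ends, helping you meet regulatory or business requirements. Lifecycle rules do not stop anyone from deleting data earlier; enforcing a minimum retention period requires a feature such as S3 Object Lock.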

- Simplified Data Lifecycle Management: Lifecycle rules eliminate the need for manual intervention, allowing users to focus on strategic tasks rather than operational overhead.

In summary, S3 lifecycle policies are a robust and flexible tool for managing the full lifecycle of your data, from frequent access in high-performance tiers to long-term storage in low-cost archival classes.

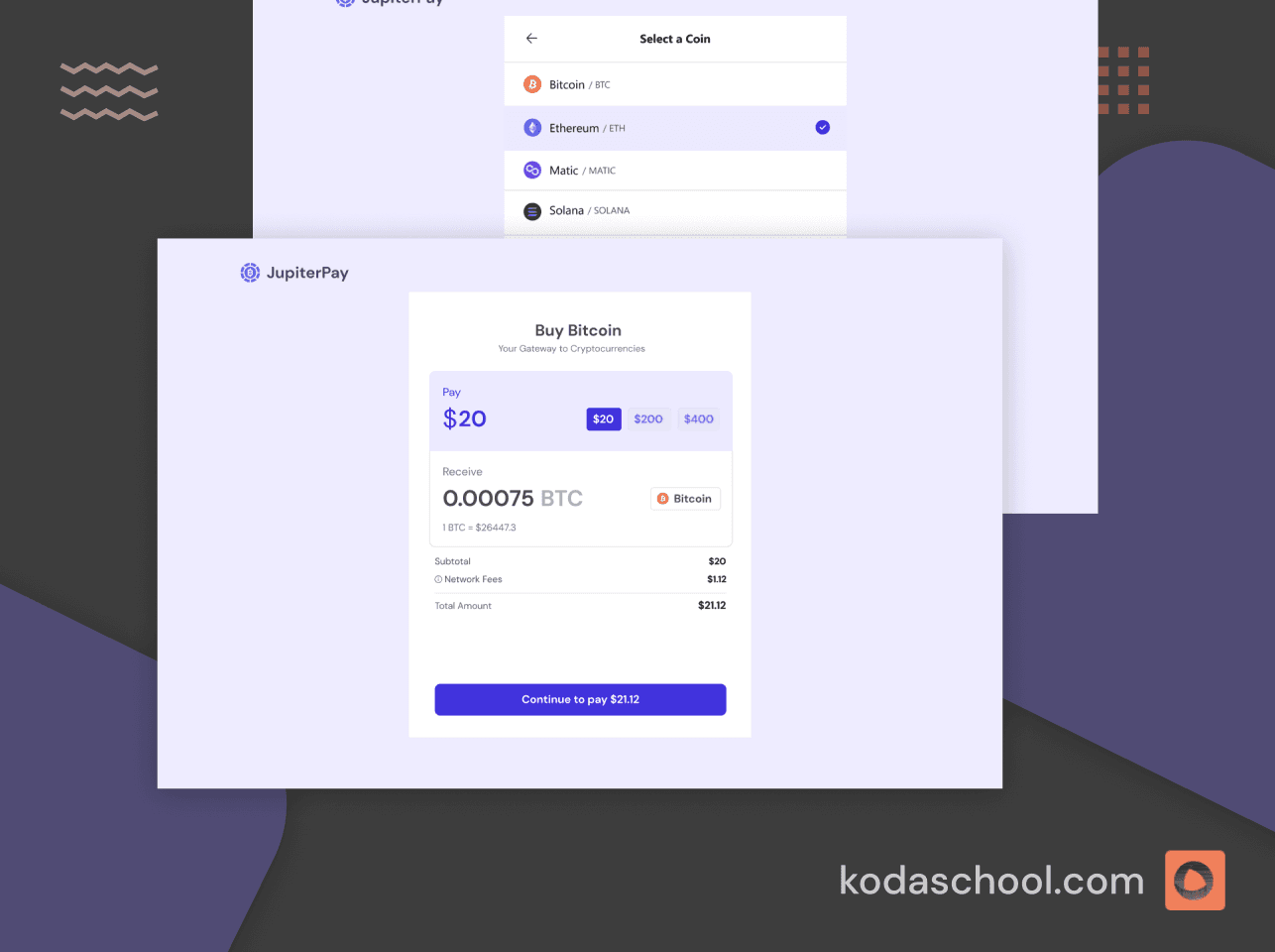

Lifecycle Rules and Storage Classes

AWS S3 offers a variety of storage classes, each tailored to different access patterns, durability requirements, and cost considerations. Lifecycle rules allow you to transition objects between these storage classes automatically, optimizing costs based on how frequently data is accessed.

AWS S3 Storage Classes

- S3 Standard
  - Designed for frequently accessed data.
  - High durability, low latency, and high throughput.
  - Use cases: active datasets such as web applications, content distribution, or data processing.

- S3 Intelligent-Tiering
  - Automatically optimizes costs by moving objects between access tiers based on access patterns.
  - No operational overhead, but charges a small monthly monitoring and automation fee per object.
  - Use cases: datasets with unpredictable or changing access patterns.

- S3 Standard-IA (Infrequent Access)
  - For data that is accessed less frequently but requires rapid (millisecond) access when needed.
  - Lower storage cost than S3 Standard, but adds a per-GB retrieval fee.
  - Use cases: backups, disaster recovery, or long-term data storage with occasional access.

- S3 One Zone-IA
  - Similar to Standard-IA, but stores data in a single Availability Zone.
  - Lower cost than Standard-IA, but the data is lost if that Availability Zone is destroyed.
  - Use cases: non-critical or reproducible data that can tolerate the loss of an Availability Zone.

- S3 Glacier Instant Retrieval
  - Archive storage with millisecond retrieval.
  - Low-cost storage for rarely accessed data that still needs instant access.
  - Use cases: medical records or regulatory documents requiring quick retrieval.

- S3 Glacier Flexible Retrieval
  - Low-cost archive storage with retrieval times from minutes to hours.
  - Lower storage costs, with expedited (minutes), standard (hours), or bulk (hours, lowest cost) retrievals.
  - Use cases: archives or data backups with infrequent access needs.

- S3 Glacier Deep Archive
  - The lowest-cost storage option, built for long-term archiving.
  - Standard retrievals complete within 12 hours; bulk retrievals within 48 hours.
  - Use cases: rarely accessed data such as compliance archives or historical records.
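Lifecycle rules and the S3 API refer to these classes by short identifiers rather than the names used above. The sketch below is a small lookup table that assumes the optional archive tiers of Intelligent-Tiering are turned off. The needs_restore helper is hypothetical; it only records which classes require a restore request before their objects can be read.

```python
# Storage classes keyed by the StorageClass string used in lifecycle rules and
# S3 API calls. The boolean is True when objects can be read directly with
# millisecond latency, and False when a restore request is needed first.
STORAGE_CLASSES = {
    "STANDARD": ("S3 Standard", True),
    # Assumes the optional Archive Access tiers of Intelligent-Tiering are not enabled.
    "INTELLIGENT_TIERING": ("S3 Intelligent-Tiering", True),
    "STANDARD_IA": ("S3 Standard-IA", True),
    "ONEZONE_IA": ("S3 One Zone-IA", True),
    "GLACIER_IR": ("S3 Glacier Instant Retrieval", True),
    "GLACIER": ("S3 Glacier Flexible Retrieval", False),
    "DEEP_ARCHIVE": ("S3 Glacier Deep Archive", False),
}


def needs_restore(storage_class: str) -> bool:
    """True if objects in this class must be restored before they can be read."""
    _, instant_access = STORAGE_CLASSES[storage_class]
    return not instant_access


for api_name, (friendly_name, _) in STORAGE_CLASSES.items():
    print(f"{friendly_name:32} {api_name:20} restore needed: {needs_restore(api_name)}")
```

Note that "GLACIER" refers to S3 Glacier Flexible Retrieval, which is easy to confuse with Glacier Instant Retrieval ("GLACIER_IR") when reading lifecycle JSON.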

Relationship Between Lifecycle Rules and Storage Classes

Lifecycle rules act as a bridge between S3 storage classes, automating transitions based on object age (days since creation). Filters such as key prefixes or object tags control which objects a rule applies to. For example:

- Data can start in S3 Standard for frequent access.

- Transition to S3 Standard-IA 30 days after creation to save costs (lifecycle rules count days since an object was created, not days since it was last accessed).

- Move to S3 Glacier Deep Archive after a year for long-term, low-cost storage.

- Expire or delete data after its retention period ends.

These rules ensure your data is stored in the most cost-efficient class throughout its lifecycle, with minimal manual intervention.

Example Use Case: Transitioning Infrequently Accessed Data to Glacier Classes

Consider a scenario where you have log files generated daily, stored in an S3 bucket:

- Store the logs in S3 Standard for the first 30 days to allow frequent access for analysis.

- After 30 days, transition the logs to S3 Standard-IA to reduce costs while maintaining quick access.

- Move the logs to S3 Glacier Deep Archive after 180 days for long-term, low-cost storage.

- Expire the logs entirely after 365 days to comply with data retention policies.

By defining these lifecycle rules, you can optimize storage costs while ensuring the availability of critical data at each stage of its lifecycle.
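Expressed as a lifecycle configuration, the log-file policy above might look like the following sketch. The rule ID and the logs/ prefix are assumptions for illustration; each Days value is counted from the object's creation date.

```python
import json

# Hypothetical rule for the log-file scenario; the ID and "logs/" prefix are assumptions.
log_rule = {
    "ID": "log-files-lifecycle",
    "Filter": {"Prefix": "logs/"},
    "Status": "Enabled",
    "Transitions": [
        {"Days": 30, "StorageClass": "STANDARD_IA"},    # day 30: S3 Standard-IA
        {"Days": 180, "StorageClass": "DEEP_ARCHIVE"},  # day 180: S3 Glacier Deep Archive
    ],
    "Expiration": {"Days": 365},                        # day 365: delete the object
}

lifecycle_configuration = {"Rules": [log_rule]}
print(json.dumps(lifecycle_configuration, indent=2))
```

All four stages live in one rule, so the logs never need a second policy to finish their lifecycle.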

How Lifecycle Policies Work

Lifecycle policies in AWS S3 are built using rules that define how and when objects are transitioned between storage classes or deleted. These rules ensure data is stored efficiently, aligning with your cost and accessibility requirements.

Rules Structure

- Prefix and Tags (filters that select which objects a rule applies to)
  - Prefix - Filters objects based on their key name. For instance, a prefix of logs/ applies the rule to every object whose key begins with logs/, that is, everything in the logs "directory".
  - Tags - Applies rules to objects that carry specific object tags (key-value pairs). For example, you can tag objects with Environment: Archive to apply archival rules to them.
  - A rule can combine a prefix and tags, in which case an object must match both.

- Transition and Expiration Settings
  - Transition Settings - Define when objects are moved to a different storage class. For example, transition objects to S3 Glacier Flexible Retrieval 90 days after creation.
  - Expiration Settings - Specify when objects should be permanently deleted. For instance, delete objects 365 days after creation.
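To make the filter logic concrete, here is a simplified, hypothetical matches_filter helper that mimics how a rule's Filter selects objects. It handles the Prefix, Tag, and And (prefix plus tags) forms used in S3 lifecycle configurations but ignores object-size filters, so treat it as an approximation rather than S3's actual implementation.

```python
from typing import Dict


def matches_filter(rule_filter: dict, key: str, tags: Dict[str, str]) -> bool:
    """Approximate whether a lifecycle rule's Filter covers an object.

    Supports the Prefix, Tag, and And (Prefix + Tags) forms; size filters are ignored.
    """
    if "And" in rule_filter:
        conditions = rule_filter["And"]
        prefix_ok = key.startswith(conditions.get("Prefix", ""))
        # Every tag listed in the rule must be on the object with the same value.
        tags_ok = all(tags.get(t["Key"]) == t["Value"] for t in conditions.get("Tags", []))
        return prefix_ok and tags_ok
    if "Tag" in rule_filter:
        tag = rule_filter["Tag"]
        return tags.get(tag["Key"]) == tag["Value"]
    # Prefix-only filter; an empty prefix matches every object.
    return key.startswith(rule_filter.get("Prefix", ""))


archive_logs = {"And": {"Prefix": "logs/", "Tags": [{"Key": "Environment", "Value": "Archive"}]}}
print(matches_filter(archive_logs, "logs/2024/app.log", {"Environment": "Archive"}))  # True
print(matches_filter(archive_logs, "logs/2024/app.log", {}))                          # False
print(matches_filter({"Prefix": "logs/"}, "images/cat.png", {}))                      # False
```

The And form is what makes "logs tagged Environment: Archive" possible; a plain Prefix or Tag filter can express only one condition.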

Steps AWS Follows When Evaluating Lifecycle Rules

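- Amazon S3 evaluates lifecycle rules asynchronously, roughly once a day, so an action can run some time after an object becomes eligible.

- Each rule's filter is matched against the object's key (prefix) and tags, and the object's age is counted from its creation date.

- An action becomes due at the creation time plus the rule's number of days, rounded up to the next midnight UTC. Once an object meets the criteria, S3 applies the defined transition or expiration action.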
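The date arithmetic can be sketched as follows, assuming S3's documented behavior of adding the rule's days to the creation time and rounding up to the next midnight UTC. The action_due_date function is hypothetical, and it returns the earliest time an action becomes due; S3 may apply the action later.

```python
from datetime import datetime, timedelta, timezone


def action_due_date(created: datetime, days: int) -> datetime:
    """Earliest time a lifecycle action set to `days` becomes due for an object.

    `created` must be timezone-aware. Adds `days` to the creation time, then
    rounds up to the next midnight UTC.
    """
    target = created.astimezone(timezone.utc) + timedelta(days=days)
    midnight = target.replace(hour=0, minute=0, second=0, microsecond=0)
    # Assumption: a result that already falls exactly on midnight is left as-is.
    return target if target == midnight else midnight + timedelta(days=1)


created = datetime(2014, 1, 15, 10, 30, tzinfo=timezone.utc)
print(action_due_date(created, 3))  # 2014-01-19 00:00:00+00:00
```

Here 15 January at 10:30 UTC plus 3 days is 18 January at 10:30 UTC, which rounds up to midnight UTC at the start of 19 January.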

Example: Transitioning Data After 30 Days of Creation

- Scenario: You store transactional logs in S3 and want to reduce costs as they age.

- Rule:
  - Transition logs from S3 Standard to S3 Standard-IA after 30 days.
  - Transition logs to S3 Glacier Flexible Retrieval after 180 days.
  - Delete logs after 365 days.

- Result: Your logs are stored cost-effectively throughout their lifecycle without manual intervention.
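Before applying a rule like this, it can help to sanity-check it. The hypothetical check_rule helper below tests two things only: Standard-IA and One Zone-IA transitions must come at least 30 days after creation, and objects should stay in each class at least as long as that class's minimum storage duration (30 days for the IA classes, 90 for Glacier Instant and Flexible Retrieval, 180 for Deep Archive) to avoid early-removal charges. It is a sketch, not an AWS tool.

```python
from typing import List

# Minimum storage duration per transition target, in days. Objects deleted or
# moved out earlier are billed as if they had stayed this long.
MIN_STORAGE_DAYS = {
    "INTELLIGENT_TIERING": 0, "STANDARD_IA": 30, "ONEZONE_IA": 30,
    "GLACIER_IR": 90, "GLACIER": 90, "DEEP_ARCHIVE": 180,
}


def check_rule(rule: dict) -> List[str]:
    """Hypothetical helper: list a few common problems in a lifecycle rule."""
    problems = []
    transitions = sorted(rule.get("Transitions", []), key=lambda tr: tr["Days"])
    expire = rule.get("Expiration", {}).get("Days")
    for t in transitions:
        # S3 rejects Standard-IA / One Zone-IA transitions earlier than 30 days.
        if t["StorageClass"] in ("STANDARD_IA", "ONEZONE_IA") and t["Days"] < 30:
            problems.append(f"{t['StorageClass']} transition must be at 30 days or later")
    # Days spent in each class = day of the next action minus day of this transition.
    next_action_days = [t["Days"] for t in transitions[1:]]
    if expire is not None:
        next_action_days.append(expire)
    for t, end in zip(transitions, next_action_days):
        stay = end - t["Days"]
        if stay < MIN_STORAGE_DAYS[t["StorageClass"]]:
            problems.append(f"only {stay} days in {t['StorageClass']}; early-removal charge applies")
    return problems


rule = {
    "Transitions": [
        {"Days": 30, "StorageClass": "STANDARD_IA"},
        {"Days": 180, "StorageClass": "GLACIER"},
    ],
    "Expiration": {"Days": 365},
}
print(check_rule(rule))  # []
```

The example rule passes: logs spend 150 days in Standard-IA and 185 days in Glacier Flexible Retrieval, both above the minimums.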

Use Cases

1. Archiving Log Files

- Scenario - Daily server logs are generated and accessed for the first month for analysis.

- Solution:
  - Store logs in S3 Standard initially.
  - Transition to S3 Glacier Flexible Retrieval after 30 days for archival storage.
  - Expire logs after one year.

- Outcome - Significant cost savings while maintaining compliance with log retention policies.
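A quick way to reason about a policy like this is to ask which storage class an object of a given age should be in. The storage_class_on_day helper is a hypothetical sketch that ignores S3's rounding to midnight UTC and its processing delay; "GLACIER" is the API name for S3 Glacier Flexible Retrieval.

```python
from typing import Optional

# Hypothetical rule for this use case: Glacier Flexible Retrieval at day 30, delete at day 365.
log_archive_rule = {
    "Transitions": [{"Days": 30, "StorageClass": "GLACIER"}],
    "Expiration": {"Days": 365},
}


def storage_class_on_day(rule: dict, age_days: int) -> Optional[str]:
    """Storage class an object should be in at a given age; None once expired."""
    expire = rule.get("Expiration", {}).get("Days")
    if expire is not None and age_days >= expire:
        return None
    current = "STANDARD"  # objects start in S3 Standard in this scenario
    for t in sorted(rule.get("Transitions", []), key=lambda tr: tr["Days"]):
        if age_days >= t["Days"]:
            current = t["StorageClass"]
    return current


for day in (0, 29, 30, 364, 365):
    print(day, storage_class_on_day(log_archive_rule, day))
```

Running it shows the logs in STANDARD through day 29, in GLACIER from day 30 to day 364, and gone (None) from day 365.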

2. Managing Backups

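- Scenario - Application backups need long-term storage for disaster recovery.

- Solution:
  - Store backups in S3 Standard for the first 7 days.
  - Then transition them to S3 Glacier Deep Archive, where they are kept for 7+ years at low cost.

- Outcome - Reliable, low-cost backup storage that meets data retention requirements. Because Deep Archive restores take up to 12 hours (standard) or 48 hours (bulk), this design suits backups whose recovery time objective can tolerate that delay.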
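A sketch of the backup rule follows, using a hypothetical backups/ prefix. Because disaster recovery eventually means reading the data back, it also includes a hypothetical helper that builds the RestoreRequest argument accepted by boto3's restore_object call. Deep Archive supports only the Standard and Bulk retrieval tiers, which the helper enforces.

```python
# Hypothetical rule: the ID and "backups/" prefix are assumptions.
backup_rule = {
    "ID": "backups-to-deep-archive",
    "Filter": {"Prefix": "backups/"},
    "Status": "Enabled",
    # Keep backups 7 days in S3 Standard, then move them to S3 Glacier Deep Archive.
    "Transitions": [{"Days": 7, "StorageClass": "DEEP_ARCHIVE"}],
    # No Expiration: backups are kept for 7+ years. If retention must end at a
    # fixed point, an Expiration such as {"Days": 7 * 365} could be added.
}


def deep_archive_restore_request(days_available: int, tier: str = "Standard") -> dict:
    """Build a RestoreRequest for an object stored in S3 Glacier Deep Archive.

    `days_available` is how long the restored temporary copy stays readable.
    Deep Archive supports the Standard (within 12 hours) and Bulk (within 48 hours) tiers.
    """
    if tier not in ("Standard", "Bulk"):
        raise ValueError("Deep Archive restores support only the Standard and Bulk tiers")
    return {"Days": days_available, "GlacierJobParameters": {"Tier": tier}}


print(deep_archive_restore_request(2))
```

For example, boto3.client("s3").restore_object(Bucket="my-example-bucket", Key="backups/db.dump", RestoreRequest=deep_archive_restore_request(2)) would ask S3 to make a temporary copy readable for 2 days; the bucket and key are placeholders.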

3. Cost Optimization

- Scenario - Your data access patterns are unpredictable.

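- Solution: Store the objects in S3 Intelligent-Tiering, either by uploading them directly to that class or with a lifecycle rule that transitions them, so S3 moves each object between access tiers based on its usage.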

- Outcome - You achieve optimal storage costs without having to predict or manage access patterns.
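If objects are not uploaded directly into Intelligent-Tiering, a lifecycle rule can move them there. The sketch below assumes every object in the bucket should be covered, so it uses an empty prefix and a transition at 0 days; the rule ID is illustrative.

```python
import json

# Hypothetical rule: move every object (empty prefix) into S3 Intelligent-Tiering
# as soon as lifecycle processing picks it up (Days = 0).
tiering_rule = {
    "ID": "all-objects-to-intelligent-tiering",
    "Filter": {"Prefix": ""},
    "Status": "Enabled",
    "Transitions": [{"Days": 0, "StorageClass": "INTELLIGENT_TIERING"}],
}

print(json.dumps({"Rules": [tiering_rule]}, indent=2))
```

Alternatively, new objects can be written straight into the class by passing StorageClass="INTELLIGENT_TIERING" when uploading, for example with boto3's put_object.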

Pricing Considerations

Lifecycle policies can significantly impact costs, as different S3 storage classes have varying pricing models for storage, access, and data retrieval.

Cost Implications of Storage Class Transitions

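- Storage Cost - Lower storage cost as data transitions from S3 Standard to archival classes like Glacier Deep Archive.

- Retrieval Cost - Higher costs for accessing data in infrequent-access and archival classes; consider access patterns carefully.

- Transition Requests - Each lifecycle transition is billed as a request, so transitioning millions of small objects can cost more than it saves.

- Minimum Storage Duration - Standard-IA and One Zone-IA bill for at least 30 days, Glacier Instant Retrieval and Flexible Retrieval for 90 days, and Deep Archive for 180 days, even if objects are deleted or moved earlier.

- Monitoring Fee - Intelligent-Tiering charges a small monthly monitoring and automation fee per object.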
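Minimum storage durations mean that moving or deleting an object too early still costs something. The hypothetical early_removal_days helper below estimates how many extra days of storage are billed when an object leaves a class early, using the published minimums (30 days for Standard-IA and One Zone-IA, 90 for Glacier Instant and Flexible Retrieval, 180 for Deep Archive).

```python
# Minimum storage duration, in days, for classes that have one.
MIN_STORAGE_DAYS = {
    "STANDARD_IA": 30, "ONEZONE_IA": 30,
    "GLACIER_IR": 90, "GLACIER": 90, "DEEP_ARCHIVE": 180,
}


def early_removal_days(storage_class: str, days_in_class: int) -> int:
    """Extra days billed when an object leaves a class before its minimum duration."""
    minimum = MIN_STORAGE_DAYS.get(storage_class, 0)  # classes not listed have no minimum
    return max(0, minimum - days_in_class)


print(early_removal_days("DEEP_ARCHIVE", 185))  # 0
print(early_removal_days("GLACIER", 30))        # 60
print(early_removal_days("STANDARD_IA", 10))    # 20
```

An object archived to Glacier Flexible Retrieval and deleted 30 days later is still billed for the remaining 60 days, so expiration dates should leave enough time in the final class.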

Comparison of Storage Classes and Associated Costs

- S3 Standard: Highest storage cost of these three, but no per-GB retrieval fee.

- S3 Glacier Instant Retrieval: Lower storage cost with millisecond retrieval, but a higher per-GB retrieval fee than Standard-IA.

- S3 Glacier Deep Archive: Lowest storage cost, designed for rarely accessed data, with retrievals taking up to 12 hours (standard) or 48 hours (bulk).
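Whether a transition pays off depends on how much storage it saves versus how many transition requests it triggers. The sketch below compares the two using made-up placeholder prices, not current AWS rates; the transition_net_saving function is hypothetical, and real figures from the S3 pricing page should be plugged in before drawing conclusions.

```python
def transition_net_saving(object_count: int, total_gb: float,
                          saving_per_gb_month: float,
                          price_per_1000_transitions: float,
                          months: float) -> float:
    """Storage saved over `months` minus the one-off cost of the transition requests.

    All prices are inputs; nothing here reflects current AWS pricing.
    """
    storage_saving = total_gb * saving_per_gb_month * months
    request_cost = object_count / 1000 * price_per_1000_transitions
    return storage_saving - request_cost


# Placeholder numbers for illustration only: the same 1,000 GB stored as
# 1,000 large objects versus 10 million small objects.
few_large = transition_net_saving(1_000, 1_000.0, 0.01, 0.05, 12)
many_small = transition_net_saving(10_000_000, 1_000.0, 0.01, 0.05, 12)
print(f"few large objects:  {few_large:.2f}")   # positive: transition saves money
print(f"many small objects: {many_small:.2f}")  # negative: requests cost more than they save
```

With the same total volume, the per-request charge dominates when the data is split into many small objects, which is why lifecycle transitions suit large objects best.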

How Lifecycle Policies Reduce Costs

- Automate transitions to lower-cost storage classes for infrequently accessed data.

- Enable expiration of unnecessary or outdated objects to save on storage costs.

- Reduce the need for manual oversight, ensuring efficient storage management.

By implementing well-planned lifecycle policies, you can achieve significant savings while maintaining data availability and compliance.

Conclusion

Understanding lifecycle policies is essential for anyone preparing for the AWS Solutions Architect Associate (SAA-C03) exam. The topic frequently appears in exam scenarios that test your ability to design cost-efficient and scalable storage solutions. By mastering lifecycle policies, you’ll not only optimize your AWS usage but also strengthen your skills for passing the exam and advancing your cloud career. Dive into lifecycle rules and start applying them to real-world scenarios to gain hands-on expertise and ace your AWS certification!

Sample Questions

Question 1

You have a bucket storing images for a marketing campaign. These images are accessed frequently in the first 30 days and only occasionally after that, when they must still load quickly. What is the most cost-effective lifecycle policy configuration?

A. Move images to S3 Intelligent-Tiering after 30 days.

B. Move images to S3 Standard-IA after 30 days.

C. Move images to S3 Glacier Instant Retrieval after 30 days.

D. Delete images after 30 days.

Answer: B

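S3 Standard-IA is ideal for infrequently accessed data that still needs millisecond access, and it offers lower storage costs than S3 Standard. Glacier Instant Retrieval has lower storage costs but higher retrieval fees and suits data accessed about once a quarter; Intelligent-Tiering adds a per-object monitoring fee that isn't needed when the access pattern is known; and deleting the images is not an option because they are still used.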

Question 2

Your organization retains daily logs for 90 days for compliance but does not need them afterward. Which lifecycle rule should you configure?

A. Transition logs to S3 Glacier after 90 days.

B. Delete logs after 90 days.

C. Move logs to S3 Standard-IA after 90 days.

D. Transition logs to S3 Glacier Deep Archive after 90 days.

Answer: B

If the data is no longer required after 90 days, configuring an expiration rule to delete the logs is the most cost-effective option.

Question 3

You have unpredictable access patterns for your application data. Sometimes the data is accessed frequently, while at other times it is dormant. Which storage class is most suitable for this use case?

A. S3 Standard.

B. S3 Standard-IA.

C. S3 Intelligent-Tiering.

D. S3 Glacier Instant Retrieval.

Answer: C

S3 Intelligent-Tiering automatically moves data between access tiers based on usage patterns, optimizing costs for unpredictable access.

Question 4

You want to optimize the cost of storing data used for monthly reports. The data should be available in S3 Standard-IA after 60 days and deleted after one year. Which lifecycle configuration is appropriate?

A. Transition to S3 Standard-IA after 60 days and delete after 1 year.

B. Transition to S3 Glacier after 60 days and delete after 1 year.

C. Delete after 60 days.

D. Keep in S3 Standard for 1 year.

Answer: A

Transitioning to S3 Standard-IA reduces costs for infrequently accessed data, and expiring it after one year further optimizes storage.

Question 5

You enable lifecycle rules to manage a bucket where incomplete multipart uploads are frequent. How should you configure the rule to optimize costs?

A. Expire incomplete uploads after 1 day.

B. Transition incomplete uploads to S3 Glacier after 1 day.

C. Expire incomplete uploads after 7 days.

D. Delete incomplete uploads manually.

Answer: C

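Expiring incomplete multipart uploads after a reasonable period (e.g., 7 days) with the AbortIncompleteMultipartUpload action prevents unnecessary storage costs without cutting off large uploads that are still in progress, which a 1-day limit could do. Incomplete uploads cannot be transitioned to another storage class, and manual deletion defeats the purpose of automation.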