AWS Elastic File System (EFS) Guide for the AWS SAA Exam

This guide is tailored for the AWS SAA exam, offering detailed insights into EFS's functionalities and its application within the AWS ecosystem.

AWS Elastic File System (EFS) is a cloud-based file storage service that provides a simple, scalable, elastic file system for use with AWS Cloud services and on-premises resources. As businesses grow, so does the data they generate, necessitating a robust solution that can expand effortlessly without compromising performance or security. EFS is designed to address these needs, offering seamless scalability and high availability, making it an integral component of cloud infrastructure, particularly for applications that require shared access to file data.

Understanding AWS Elastic File System (EFS)

Definition and Role in AWS

AWS Elastic File System (EFS) can be compared to a magical bookshelf that not only stores an unlimited number of books but also lets many readers reach for the same book at the same time. In technical terms, EFS provides a fully managed file system, accessed over the NFS protocol, that can be mounted on thousands of Amazon EC2 instances at once and is accessible from multiple AWS services. It is designed for high performance, easy scalability, and seamless integration with AWS compute services, making it ideal for applications that require a common file storage space such as content management systems, development environments, and big data analytics applications.
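To make the idea of mounting a shared file system concrete, here is a minimal sketch that builds (but does not run) an NFS mount command for an EFS file system. The file system ID, region, and mount point are hypothetical, and the NFS options are the ones commonly recommended in the AWS documentation; treat them as an assumption and check the current docs before relying on them.

```python
# NFS client options commonly recommended for EFS (assumption: verify
# against the current AWS documentation before using in production).
NFS_OPTIONS = (
    "nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport"
)


def efs_dns_name(file_system_id: str, region: str) -> str:
    """Return the regional DNS name used to reach a file system's mount target."""
    return f"{file_system_id}.efs.{region}.amazonaws.com"


def nfs_mount_command(file_system_id: str, region: str, mount_point: str) -> str:
    """Build the shell command an EC2 instance could use to mount the file system."""
    target = f"{efs_dns_name(file_system_id, region)}:/"
    return f"sudo mount -t nfs4 -o {NFS_OPTIONS} {target} {mount_point}"


if __name__ == "__main__":
    # Hypothetical file system ID and mount point, for illustration only.
    print(nfs_mount_command("fs-0123456789abcdef0", "us-east-1", "/mnt/efs"))
```

Running the same command on every instance in a fleet gives each of them the same view of the files, which is the shared-access behavior described above.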

Comparison with Other AWS Storage Services

While EFS, Amazon S3 (Simple Storage Service), and Amazon EBS (Elastic Block Store) all provide storage solutions, they serve different needs and use cases:

- Amazon S3 is like a massive warehouse of data bins where each bin can store any amount of data but is accessed as a whole. This makes it perfect for data that isn't frequently changed, such as backups, media files, and archives.

- Amazon EBS, on the other hand, is like a traditional hard drive that is attached to your cloud server (EC2 instance). It is ideal for applications requiring frequent read/write operations with low latency, such as databases.

- AWS EFS provides a middle ground with the flexibility of cloud-based file storage that can be shared across multiple instances, resembling how network-attached storage (NAS) operates. This makes it uniquely suited for applications that require multiple instances to access the same data simultaneously.

Key Features of AWS EFS

Scalability

AWS Elastic File System (EFS) is designed with automatic scalability, meaning it can grow and shrink automatically as files are added or removed. This elasticity ensures that applications have access to storage capacity as needed without requiring manual intervention. The benefit of this feature is twofold: it optimizes performance, as the file system adapts to the current load, and it is cost-effective because you pay only for the storage you use.

Durability and Availability

AWS EFS is built to offer robust durability and high availability. With the default Regional (Standard) storage classes, data is stored redundantly across multiple Availability Zones (AZs) within a region, so each file and directory is kept in multiple physically separate locations. This multi-AZ design is what lets EFS target a durability level of 99.999999999% (11 9's) while also supporting high availability: even if one AZ fails, the data remains accessible from the other AZs, making EFS an excellent choice for critical applications requiring high uptime. One Zone storage classes, by contrast, keep data within a single AZ at a lower cost.

Security

Security in AWS EFS is comprehensive and integrates seamlessly with AWS's native security services. EFS supports encryption at rest using keys managed through AWS Key Management Service (KMS). Additionally, encryption in transit is available, ensuring that data is protected from unauthorized access as it moves between your EC2 instances and the EFS file systems. The integration with AWS IAM allows fine-grained control over who can access the EFS resources, enabling administrators to define policies that grant or deny actions on specific file systems. Furthermore, file-level and directory-level access can be managed via POSIX permissions, offering additional layers of security control.
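As an illustration of how IAM-style policies and encryption in transit fit together, the sketch below builds a file system policy that denies any client connecting without TLS. It follows the general pattern shown in the AWS documentation (a `Deny` statement conditioned on `aws:SecureTransport`), but the ARN and the `Sid` are hypothetical, and the function only constructs the JSON document; it does not attach it to a file system.

```python
import json


def tls_only_policy(file_system_arn: str) -> dict:
    """Build a file system policy that rejects clients not using TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnencryptedTransport",  # hypothetical label
                "Effect": "Deny",
                "Principal": {"AWS": "*"},
                "Action": "*",
                "Resource": file_system_arn,
                # aws:SecureTransport is "false" when the request is not over TLS.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical account ID and file system ID.
    arn = "arn:aws:elasticfilesystem:us-east-1:111122223333:file-system/fs-0123456789abcdef0"
    print(json.dumps(tls_only_policy(arn), indent=2))
```

POSIX permissions still apply on top of such a policy: the policy decides whether a client may connect at all, while file and directory modes decide what that client can do once mounted.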

Performance Modes

EFS offers two performance modes to cater to different application needs: General Purpose and Max I/O:

- General Purpose Performance Mode is the default mode and is optimized for latency-sensitive use cases, where each file operation (opening, reading, writing, or closing a file) should complete with the lowest possible latency. This mode is well-suited for web serving, content management, and home directory scenarios.

- Max I/O Performance Mode is designed for applications that require higher levels of aggregate throughput and operations per second, albeit with slightly higher latencies. This mode is ideal for large-scale and data-heavy applications such as big data analysis, media processing, and scientific simulations.
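The choice between the two modes can be sketched as a simple rule of thumb. The helper below is an assumption-laden illustration, not an official decision procedure: `choose_performance_mode` and `create_file_system_params` are hypothetical names, and the returned dictionary merely mirrors the `PerformanceMode` values (`generalPurpose`, `maxIO`) accepted when a file system is created. Because the performance mode cannot be changed after creation, it is worth deciding up front.

```python
VALID_PERFORMANCE_MODES = ("generalPurpose", "maxIO")


def choose_performance_mode(latency_sensitive: bool, highly_parallel: bool) -> str:
    """Rule of thumb only: stay on General Purpose unless the workload is
    highly parallel and can tolerate somewhat higher per-operation latency."""
    if highly_parallel and not latency_sensitive:
        return "maxIO"
    return "generalPurpose"


def create_file_system_params(creation_token: str, performance_mode: str) -> dict:
    """Assemble request parameters for creating an encrypted file system.

    This only builds the dictionary; it does not call any AWS API.
    """
    if performance_mode not in VALID_PERFORMANCE_MODES:
        raise ValueError(f"unknown performance mode: {performance_mode!r}")
    return {
        "CreationToken": creation_token,  # idempotency token chosen by the caller
        "PerformanceMode": performance_mode,
        "Encrypted": True,
    }


if __name__ == "__main__":
    mode = choose_performance_mode(latency_sensitive=False, highly_parallel=True)
    print(create_file_system_params("analytics-shared-fs", mode))
```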

Throughput Modes

EFS provides two throughput modes to manage the volume of data that can be transferred in and out of the file system: Bursting Throughput and Provisioned Throughput:

- Bursting Throughput Mode allows the throughput to scale with the amount of data stored in the file system. This mode uses a credit system: the file system earns credits at a baseline rate determined by its size, accumulates them while it is driving less than that baseline, and spends them when reads and writes burst above it. It is suitable for workloads with variable read and write rates.

- Provisioned Throughput Mode is useful when the set baseline throughput limit of the Bursting Mode is insufficient for the application needs. In this mode, you can manually specify the throughput, independent of the amount of data stored, which can be crucial for applications that require a consistent and high level of throughput.
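The credit mechanism behind Bursting Throughput can be illustrated with a little arithmetic. The sketch below assumes a baseline of 50 MiB/s per TiB stored, which is the figure commonly quoted for EFS; treat that constant as an assumption to verify against current AWS documentation. The model is deliberately simplified and ignores the burst ceiling and the maximum credit balance.

```python
# Assumed baseline rate: 50 MiB/s of throughput per TiB of data stored.
BASELINE_MIBPS_PER_TIB = 50.0


def baseline_mibps(stored_tib: float) -> float:
    """Baseline throughput earned by a file system of the given size."""
    return BASELINE_MIBPS_PER_TIB * stored_tib


def credit_balance_after(
    balance_mib: float, stored_tib: float, used_mibps: float, seconds: float
) -> float:
    """Update a burst credit balance (in MiB) after a period of steady usage.

    Credits accrue at the baseline rate and are spent at the actual rate,
    so the balance grows when usage is below baseline and shrinks above it.
    """
    earned = baseline_mibps(stored_tib) * seconds
    spent = used_mibps * seconds
    return max(0.0, balance_mib + earned - spent)


if __name__ == "__main__":
    # A 2 TiB file system bursting at 150 MiB/s for one minute, then idle.
    balance = credit_balance_after(10_000.0, 2.0, used_mibps=150.0, seconds=60)
    print(balance)  # credits drain while above the 100 MiB/s baseline
    balance = credit_balance_after(balance, 2.0, used_mibps=0.0, seconds=60)
    print(balance)  # credits refill while idle
```

If a workload keeps draining the balance faster than it refills, that is the signal that Provisioned Throughput may be the better fit.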

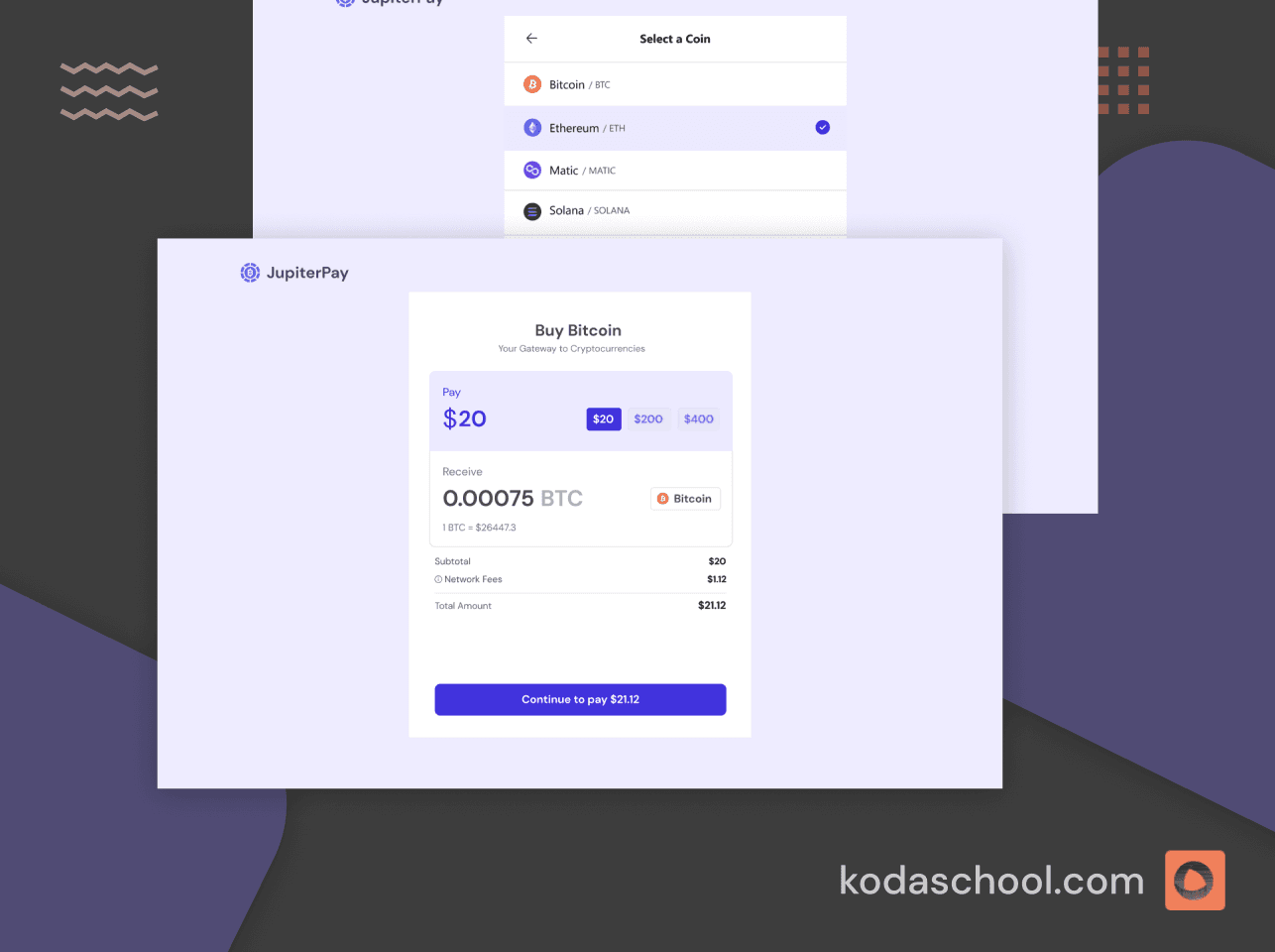

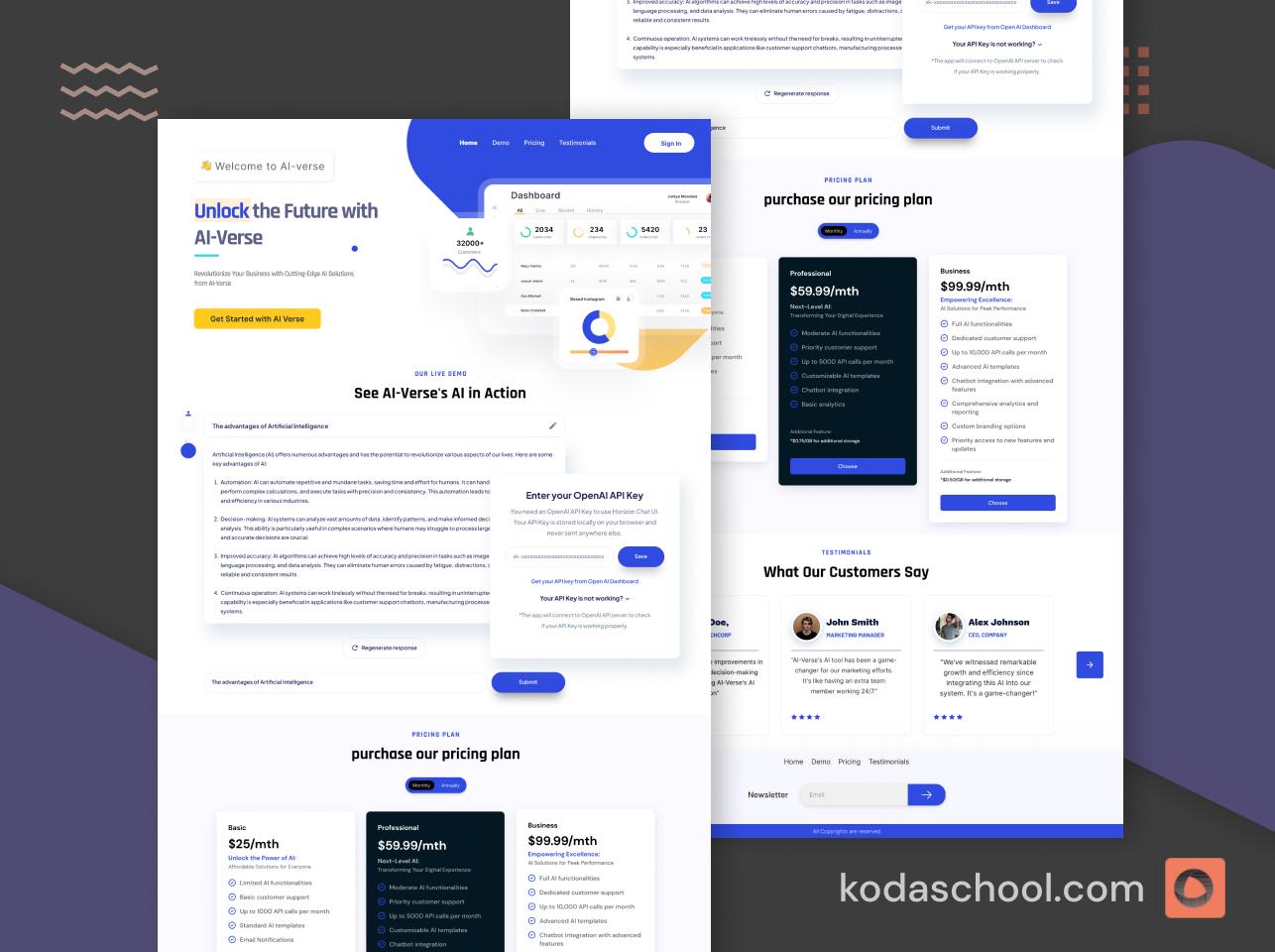

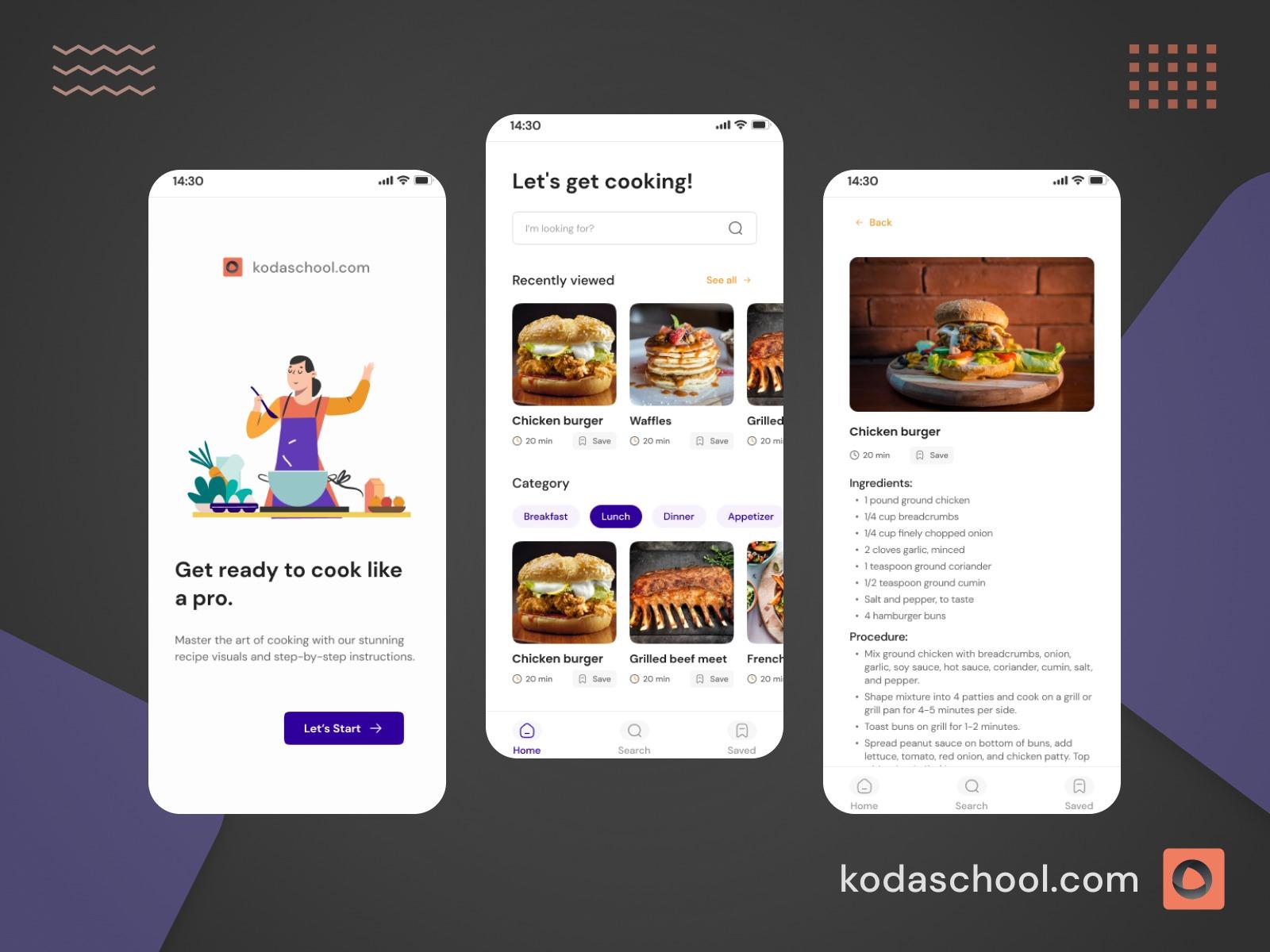

Monitoring and Managing EFS

- Use AWS CloudWatch - Monitor metrics such as TotalIOBytes, PermittedThroughput, and DataReadIOBytes.

- Implement AWS CloudTrail - Track actions taken on EFS, including API calls.

- Use AWS DataSync - For efficient data transfer to EFS from on-premises storage or other AWS services (DataSync is the successor to the older EFS File Sync tool).

- Regularly Review Security Settings - Check security groups and IAM roles to ensure compliance with your organization’s policies.
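One way to use the CloudWatch metrics above is to compare actual throughput with the permitted throughput. The sketch below assumes you have already fetched the `Sum` of `TotalIOBytes` over a period and the `PermittedThroughput` value (in bytes per second); the numbers in the usage example are made up, and the helper names are hypothetical. The `AWS/EFS` namespace and `FileSystemId` dimension are the ones EFS publishes its metrics under.

```python
def efs_metric_query(file_system_id: str, metric_name: str) -> dict:
    """Identify an EFS metric in CloudWatch (namespace plus dimension)."""
    return {
        "Namespace": "AWS/EFS",
        "MetricName": metric_name,
        "Dimensions": [{"Name": "FileSystemId", "Value": file_system_id}],
    }


def average_throughput(total_io_bytes_sum: float, period_seconds: int) -> float:
    """Average throughput in bytes/second over one CloudWatch period."""
    if period_seconds <= 0:
        raise ValueError("period_seconds must be positive")
    return total_io_bytes_sum / period_seconds


def percent_of_permitted(
    total_io_bytes_sum: float, period_seconds: int, permitted_bytes_per_sec: float
) -> float:
    """How much of the permitted throughput was used, as a percentage."""
    used = average_throughput(total_io_bytes_sum, period_seconds)
    return used / permitted_bytes_per_sec * 100.0


if __name__ == "__main__":
    # Made-up datapoints: 6,291,456,000 bytes in 60 s against 200 MiB/s permitted.
    print(percent_of_permitted(6_291_456_000, 60, 200 * 1024 * 1024))
```

A value that stays close to 100% suggests the file system is throughput-bound and that the throughput mode deserves a second look.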

Use Cases

Big Data Analytics: EFS is ideal for handling large datasets required in big data analytics. It allows multiple instances to concurrently read and write data, providing the necessary performance for big data tools like Hadoop or Spark.

Web Serving: Websites with dynamic content that require frequent updates benefit from EFS because it allows web server clusters to access and serve the same data efficiently.

Content Management: For content management systems (CMS) that need to manage large volumes of content across various instances, EFS provides a centralized storage solution that ensures data consistency and reliability.

Application Hosting: EFS supports application hosting environments by offering shared file storage for applications that are scaled horizontally across multiple servers.

Case Study: A media company uses EFS to store and distribute large media files across their content delivery network. The scalable nature of EFS allows them to handle spikes in traffic during major events seamlessly.

Best Practices and Optimization Tips

Optimizing Costs and Performance

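- Utilize Provisioned Throughput wisely: Opt for Provisioned Throughput during high-demand periods and switch back to Bursting Throughput as demand normalizes. Plan these switches ahead of time, since AWS limits how often the throughput mode can be changed or provisioned throughput decreased (once per 24 hours at the time of writing).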

- Lifecycle Management: Implement lifecycle policies to move older data to infrequent access storage classes, reducing costs significantly.
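A lifecycle policy of the kind mentioned above can be sketched as a small configuration builder. The helper below is hypothetical and only assembles the policy list; the `TransitionToIA` and `TransitionToPrimaryStorageClass` keys and their values follow the shape used by the EFS lifecycle configuration API, but the set of allowed durations here is an assumed subset, so check the current API reference for the full list.

```python
# Assumed subset of the durations the lifecycle API accepts.
ALLOWED_IA_TRANSITIONS = {
    "AFTER_7_DAYS",
    "AFTER_14_DAYS",
    "AFTER_30_DAYS",
    "AFTER_60_DAYS",
    "AFTER_90_DAYS",
}


def lifecycle_policies(days_without_access: int, return_on_access: bool = True) -> list:
    """Build lifecycle policies that move cold files to Infrequent Access.

    When return_on_access is True, a file is moved back to primary storage
    the first time it is read again.
    """
    transition = f"AFTER_{days_without_access}_DAYS"
    if transition not in ALLOWED_IA_TRANSITIONS:
        raise ValueError(f"unsupported transition period: {days_without_access} days")
    policies = [{"TransitionToIA": transition}]
    if return_on_access:
        policies.append({"TransitionToPrimaryStorageClass": "AFTER_1_ACCESS"})
    return policies


if __name__ == "__main__":
    print(lifecycle_policies(30))
```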

Data Security and Compliance

- Regular Audits: Conduct regular security audits and compliance checks to ensure encryption settings and access controls meet policy standards.

- Use Dedicated Security Groups: Isolate file system traffic to dedicated security groups to tighten security and monitor traffic flows more effectively.
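The dedicated security group idea can be made concrete with a single ingress rule: the mount targets' security group accepts NFS traffic (TCP port 2049) only from the security group attached to the client instances. The sketch below builds that rule in the `IpPermissions` shape used by the EC2 security group APIs; the group ID and description are hypothetical, and nothing is sent to AWS.

```python
NFS_PORT = 2049  # standard NFS port, used by EFS mount targets


def nfs_ingress_rule(client_security_group_id: str) -> dict:
    """Allow inbound NFS only from members of the given security group."""
    return {
        "IpProtocol": "tcp",
        "FromPort": NFS_PORT,
        "ToPort": NFS_PORT,
        "UserIdGroupPairs": [
            {
                "GroupId": client_security_group_id,
                "Description": "NFS from application instances",  # hypothetical
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical ID of the security group attached to the EC2 clients.
    print(nfs_ingress_rule("sg-0123456789abcdef0"))
```

Referencing a security group rather than an IP range means new instances gain access simply by joining the client group, and no other traffic reaches the mount targets.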

Sample Questions

Question 1

What must be considered when choosing between General Purpose and Max I/O performance modes for an EFS file system?

A) The cost of the service

B) The type of EC2 instances connected

C) The I/O characteristics of the application

D) The geographical location of the data centers

Answer: C

The choice between General Purpose and Max I/O performance modes depends on the I/O characteristics of the application. General Purpose is suited for latency-sensitive use cases, while Max I/O is designed for high-throughput, data-intensive operations.

Question 2

How does EFS handle concurrent writes from multiple clients to the same file?

A) It locks the file until the write operation is complete

B) It allows each client to write without any restrictions

C) It uses optimistic concurrency control

D) It queues write operations

Answer: C

EFS uses optimistic concurrency control, which allows multiple clients to write to the same file without conflicts by handling potential data inconsistencies that could arise from simultaneous writes.

Question 3

Which of the following is NOT a billing factor for Amazon EFS?

A) The number of file systems created

B) Data storage amount

C) Throughput mode

D) Data transfer out of Amazon EFS

Answer: A

The number of file systems created does not affect billing. Amazon EFS costs are based on the amount of data stored, the chosen throughput mode, and data transfer out of the service.

Question 4

What is a key advantage of using EFS Infrequent Access (IA) storage class?

A) Higher performance for live database hosting

B) Reduced costs for infrequently accessed data

C) Automatically scales with increased data input/output

D) It encrypts data using AWS KMS

Answer: B

The EFS IA storage class offers reduced costs for storing infrequently accessed data, making it cost-effective for data that does not require frequent access.

Question 5

What AWS service can be integrated with EFS to automate backups?

A) AWS DataSync

B) Amazon S3

C) AWS Backup

D) Amazon CloudFront

Answer: C

AWS Backup can be integrated with EFS to automate the backup process, providing a simple and secure solution for creating, managing, and restoring backups.