Auto Scaling Guide for the AWS Certified Solutions Architect Associate Exam

Auto Scaling dynamically adjusts compute resources based on demand, ensuring cost efficiency and optimal performance.

Auto scaling in AWS allows businesses and developers to adjust the computing resources they use automatically, based on the real-time demands of their applications. This capability ensures optimal performance and cost efficiency. In this article, we will explore which AWS services can be auto-scaled, the triggers for scaling, and how auto scaling adjusts instance counts.

AWS Services That Support Auto Scaling

- Amazon EC2: Auto Scaling for EC2 helps you ensure that you have the correct number of Amazon EC2 instances available to handle the load for your application.

- Amazon ECS: You can auto-scale your containerized services to meet demand. Service Auto Scaling adjusts the number of running tasks, and cluster auto scaling (through capacity providers) adjusts the EC2 instances that back the cluster.

- Amazon RDS (Aurora): Aurora Auto Scaling automatically adjusts the number of Aurora Replicas in an Aurora DB cluster. Other RDS engines offer storage autoscaling rather than automatic scaling of the instance count.

- Amazon DynamoDB: DynamoDB supports auto scaling for managing throughput capacity, automatically adjusting the provisioned read and write capacity of tables and indexes in response to traffic.

- Amazon EMR: EMR clusters can auto-scale by adding or removing instances to optimize the processing of big data applications.

- Amazon S3: S3 has no Auto Scaling policies to configure because it scales storage and request capacity automatically. It therefore works seamlessly alongside auto-scaled compute services.

Triggers for Scaling

Auto scaling is typically triggered by specific conditions defined in scaling policies. These triggers are based on various metrics that reflect the demand on resources. Common triggers include:

- CPU Utilization: A common metric for EC2 and ECS, where additional instances or tasks are launched if the CPU usage goes beyond a set threshold.

- Request Counts: Useful in load-balanced environments, scaling out when the number of requests per target exceeds a set threshold.

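- Memory Usage: Important for memory-intensive applications, triggering scaling when memory thresholds are crossed. Memory is not among the default EC2 metrics in CloudWatch, so it has to be published by the CloudWatch agent or as a custom metric.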

- Custom Metrics: AWS also allows the creation of custom CloudWatch metrics to trigger scaling activities based on application-specific needs.
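To make the idea of a threshold trigger concrete, here is a minimal sketch in plain Python. It assumes a simplified alarm rule (the metric must exceed the threshold for a number of consecutive periods); the function name `alarm_breached` and the sample values are hypothetical and are not part of any AWS API.

```python
from typing import Sequence


def alarm_breached(datapoints: Sequence[float], threshold: float, periods: int) -> bool:
    """Return True when the last `periods` datapoints all exceed `threshold`."""
    if periods <= 0 or len(datapoints) < periods:
        return False  # not enough data to evaluate the rule
    return all(value > threshold for value in datapoints[-periods:])


# Hypothetical average CPU utilization (%) sampled once per period, oldest first.
cpu_samples = [42.0, 55.0, 71.0, 78.0, 83.0]

print(alarm_breached(cpu_samples, threshold=70.0, periods=3))  # True
print(alarm_breached(cpu_samples, threshold=70.0, periods=4))  # False
```

Requiring several consecutive breaches is one way to avoid reacting to a single short spike.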

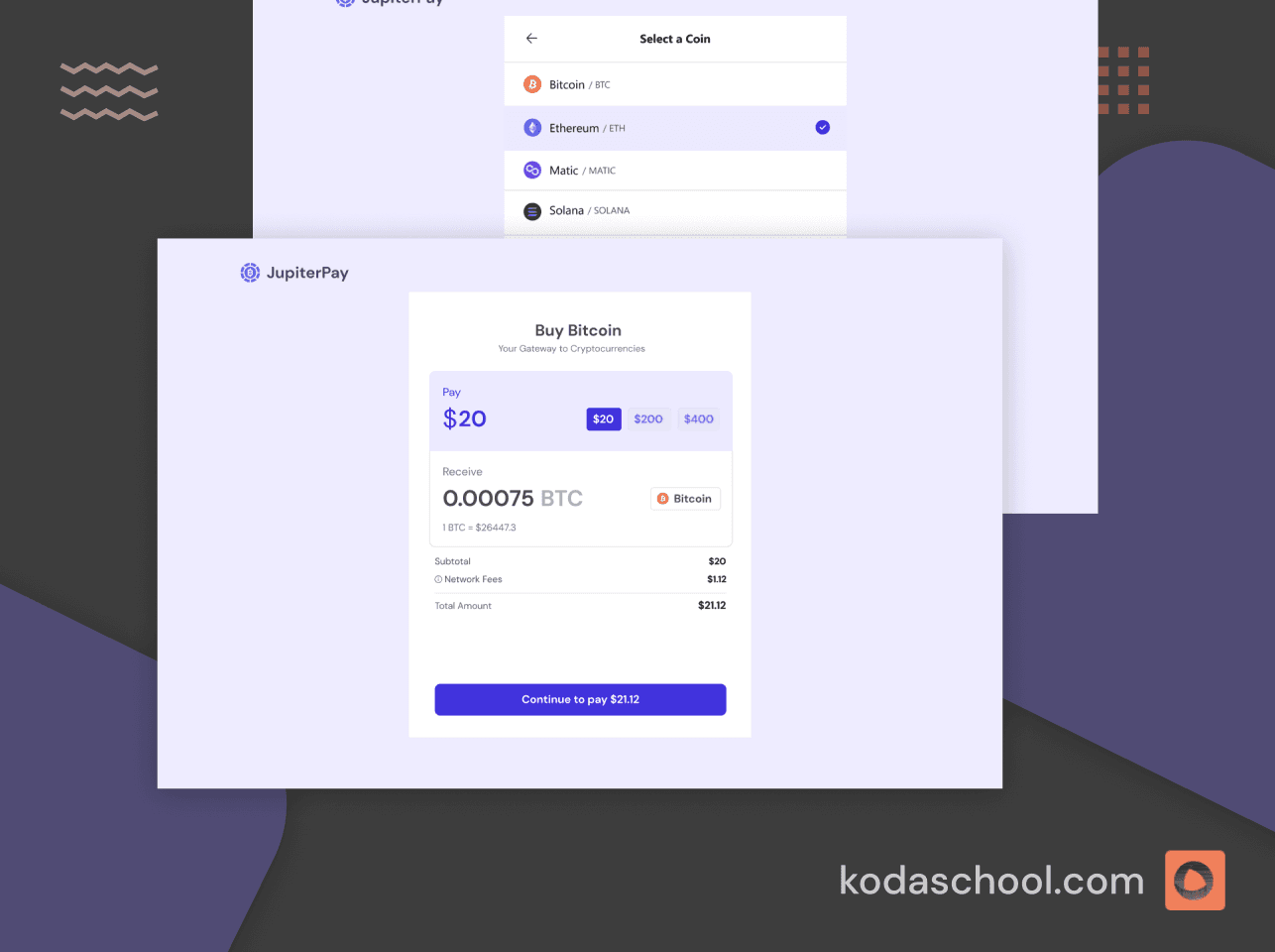

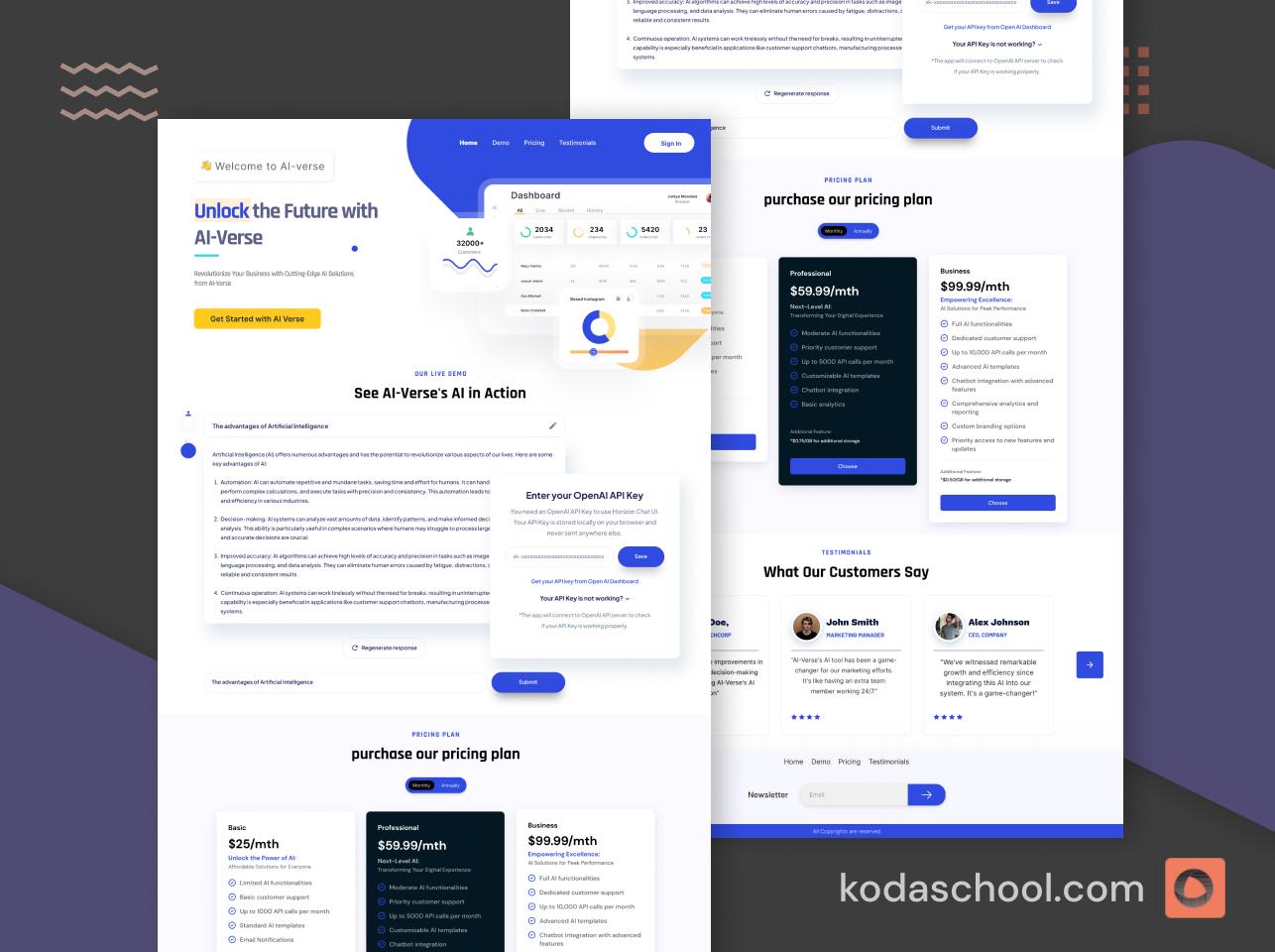

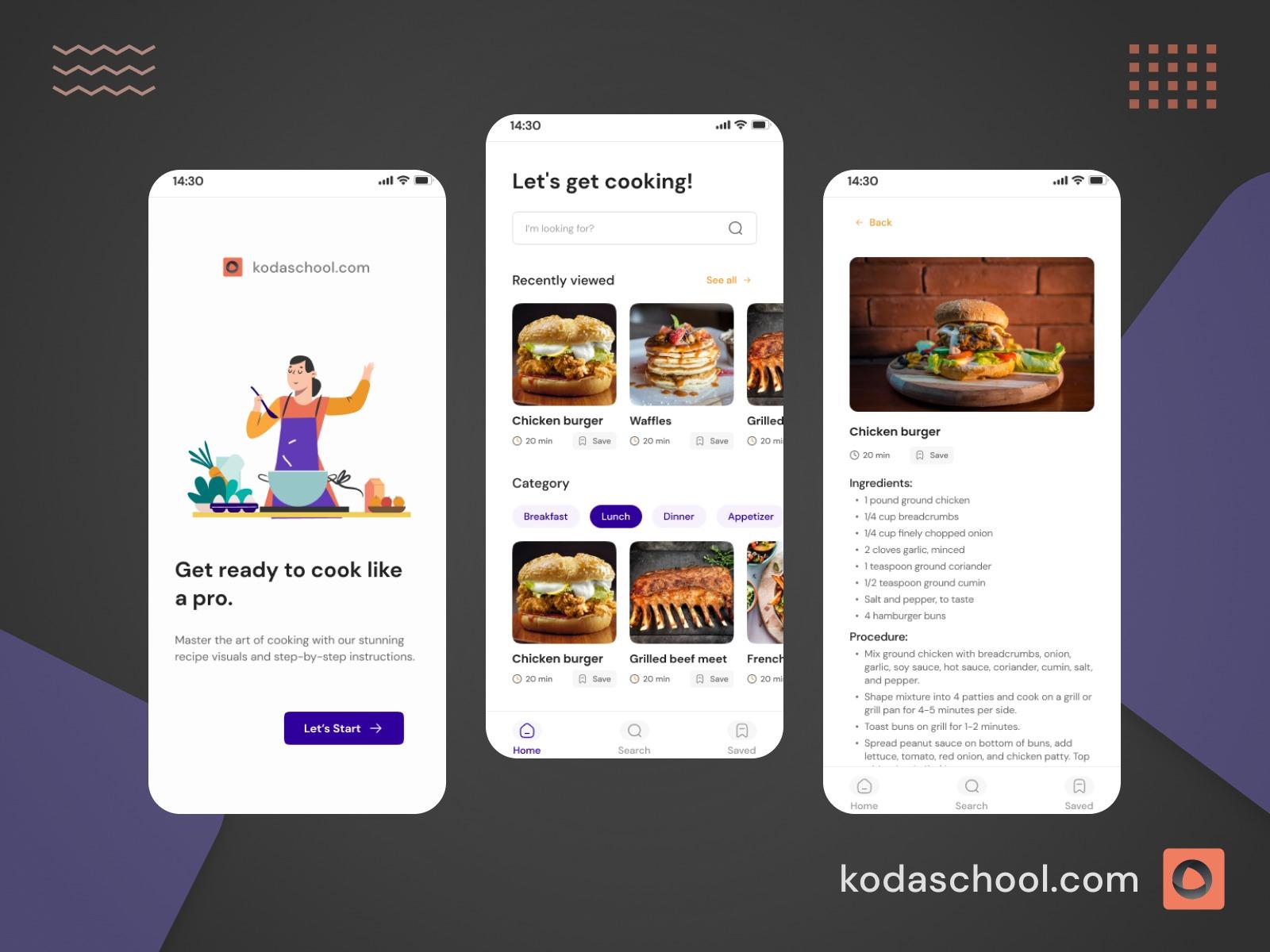

Types of Scaling Plans in AWS Auto Scaling

AWS Auto Scaling supports various scaling plans, allowing users to adapt their resource management strategies to suit different scenarios and requirements. These scaling plans include:

1. Manual Scaling

- This is the simplest form of scaling where the administrator manually changes the number of instances or resources. It does not rely on any metrics or alarms.

- Manual scaling is suitable for predictable changes in load, such as those anticipated from planned events or deployments.

2. Scheduled Scaling

- Scheduled scaling allows you to scale your application in anticipation of known load changes. For instance, you can schedule scaling actions based on predictable load changes that occur daily, weekly, or monthly.

- Ideal for use cases like increasing capacity ahead of peak business hours or scaling down during off-peak times.

3. Dynamic Scaling

- Dynamic scaling responds to changing demand in real time. It adjusts capacity based on actual usage metrics, such as CPU utilization or network traffic.

- Dynamic scaling is crucial for applications with unpredictable workloads, ensuring they handle spikes without manual intervention.

4. Predictive Scaling

- Predictive scaling uses machine learning algorithms to predict future traffic patterns and schedules scaling actions in advance. It automatically learns the daily and weekly patterns of the application.

- Best for applications with variable but predictable traffic patterns, such as those influenced by user behavior or seasonal effects.
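Of the four plan types above, scheduled scaling is the easiest to picture in code. The following is a rough sketch, not the AWS implementation: `ScheduledAction`, `capacity_at`, and the hours and capacities are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScheduledAction:
    start_hour: int        # hour of day (0-23) at which the action takes effect
    desired_capacity: int  # instance count to set from that hour onward


def capacity_at(hour: int, actions: list[ScheduledAction], default: int) -> int:
    """Return the capacity set by the most recent action at or before `hour`."""
    capacity = default
    for action in sorted(actions, key=lambda a: a.start_hour):
        if action.start_hour <= hour:
            capacity = action.desired_capacity
    return capacity


# Hypothetical schedule: scale out for business hours, scale in at night.
schedule = [ScheduledAction(8, 6), ScheduledAction(20, 2)]

print(capacity_at(7, schedule, default=2))   # 2
print(capacity_at(12, schedule, default=2))  # 6
print(capacity_at(22, schedule, default=2))  # 2
```

Dynamic and predictive scaling differ in that the capacity is derived from observed or forecast metrics instead of a fixed timetable.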

How Auto Scaling Adjusts Instance Counts

Auto scaling involves three main components:

- Launch Templates (or legacy Launch Configurations): These are blueprints that describe the configuration of the instances to be launched, such as instance type, AMI ID, and security groups. AWS recommends launch templates for new Auto Scaling groups.

- Auto Scaling Groups: These groups define the minimum and maximum number of instances, the desired capacity, and the scaling policies. The group ensures that the number of instances stays within defined parameters.

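- Scaling Policies: These policies define the triggers for scaling actions. Dynamic scaling policies come in three types: simple scaling (one fixed adjustment per alarm, followed by a cooldown), step scaling (adjustments sized by how far the metric breaches the threshold), and target tracking (capacity adjusted to keep a metric near a target value). For manual and scheduled scaling, the triggers are predefined by the user. For dynamic and predictive scaling, the triggers are metric-based and forecast-driven, respectively.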
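The interplay between a group's bounds and a policy's request can be sketched as follows. This is a simplified model under stated assumptions: the `AutoScalingGroup` class here is a hypothetical stand-in (not the AWS resource or SDK), and `target_tracking_capacity` uses a plain proportional rule to approximate a policy that tries to hold a metric near a target value. The real service's calculation is more involved.

```python
import math
from dataclasses import dataclass


@dataclass
class AutoScalingGroup:
    min_size: int
    max_size: int
    desired_capacity: int

    def set_desired(self, requested: int) -> int:
        """Clamp the requested capacity to the group's min/max bounds."""
        self.desired_capacity = max(self.min_size, min(self.max_size, requested))
        return self.desired_capacity


def target_tracking_capacity(current: int, metric: float, target: float) -> int:
    """Proportional estimate of the capacity needed to bring `metric` back to `target`."""
    return math.ceil(current * metric / target)


group = AutoScalingGroup(min_size=2, max_size=10, desired_capacity=4)

# Average CPU is 90% against a 50% target, so the policy asks for more instances.
wanted = target_tracking_capacity(group.desired_capacity, metric=90.0, target=50.0)
print(wanted)                     # 8
print(group.set_desired(wanted))  # 8
print(group.set_desired(25))      # 10, capped at max_size
```

Whatever a policy requests, the group never goes below its minimum or above its maximum size.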

Scaling Process

- Detection: A trigger (like CPU utilization) hits the threshold defined in the policy.

- Action: The auto scaling feature executes the action specified in the policy, such as launching or terminating instances.

- Balancing: After scaling, a cooldown or warm-up period gives the new capacity time to affect the metrics before the policy is evaluated again. This avoids over-provisioning caused by repeated scaling actions on stale data.
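The three steps above can be sketched as a small simulation. This is an illustrative model only: the function `simulate`, its thresholds, and the fixed "wait" period after each action (a stand-in for a cooldown) are assumptions made for the example, not AWS behavior or API names.

```python
def simulate(cpu_by_minute, start=2, minimum=2, maximum=6,
             high=70.0, low=30.0, cooldown=3):
    """Return the instance count after each minute of a simple scaling loop."""
    capacity = start
    wait = 0       # minutes left before another scaling action is allowed
    history = []
    for cpu in cpu_by_minute:
        if wait > 0:
            wait -= 1                  # still settling: skip detection
        elif cpu > high and capacity < maximum:
            capacity += 1              # detection, then scale-out action
            wait = cooldown
        elif cpu < low and capacity > minimum:
            capacity -= 1              # detection, then scale-in action
            wait = cooldown
        history.append(capacity)
    return history


# Hypothetical per-minute average CPU (%): a spike followed by a quiet period.
print(simulate([80, 85, 90, 88, 60, 20, 20, 20, 20, 20]))
# [3, 3, 3, 3, 3, 2, 2, 2, 2, 2]
```

Note that the sustained spike adds only one instance: the waiting period suppresses further actions until the earlier one has had time to take effect.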

Auto Scaling vs Load Balancing

Auto Scaling is primarily focused on ensuring that the number of active servers (instances) is in line with the need at any given moment to handle the traffic efficiently without unnecessary costs. Load balancing, on the other hand, is the process of distributing network or application traffic across multiple servers to ensure no single server bears too much demand. By spreading the load, load balancers improve responsiveness and increase the availability of applications.

How They Work Together

While Auto Scaling and Load Balancing are distinct, they often work in tandem within cloud environments to manage application scalability and performance:

- Auto Scaling ensures that the right number of instances is running according to the load. As demand increases, Auto Scaling adds more instances to the pool to handle the load, and as it decreases, it removes excess instances.

- Load Balancing takes the traffic and distributes it across all available instances within the auto scaling group. Even as the number of instances changes, the load balancer seamlessly reroutes traffic to ensure even distribution across all active instances.
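As a rough illustration of the second point, the sketch below spreads requests across whatever instances are currently registered. It assumes simple round-robin routing, which is only one of several strategies a load balancer may use; the function `distribute` and the instance IDs are hypothetical.

```python
from collections import Counter
from itertools import cycle


def distribute(requests: int, instances: list[str]) -> Counter:
    """Assign `requests` to `instances` in round-robin order and count them."""
    if not instances:
        raise ValueError("at least one instance is required")
    targets = cycle(instances)
    return Counter(next(targets) for _ in range(requests))


before = distribute(6, ["i-a", "i-b"])
after = distribute(6, ["i-a", "i-b", "i-c"])  # one instance added by a scale-out

print(before["i-a"], before["i-b"])              # 3 3
print(after["i-a"], after["i-b"], after["i-c"])  # 2 2 2
```

The same traffic is shared by more instances after the scale-out, so each one handles less load.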

Conclusion

Auto scaling in AWS provides a flexible and powerful way to manage application resources dynamically. By effectively using auto scaling, organizations can ensure their applications are running at optimum performance levels without incurring unnecessary costs. It's a crucial component of cloud resource management that helps in maintaining application reliability and service availability.