Amazon Redshift for the AWS Solutions Architect Associate Exam

This guide covers the architecture, key features, and use cases of Amazon Redshift for implementing high-performance data warehousing solutions.

Overview of Amazon Redshift

What is Amazon Redshift?

Amazon Redshift is a fast, scalable, fully managed data warehouse service for analyzing data with standard SQL and your existing BI tools. Redshift runs complex queries against large volumes of structured data, and a cluster can scale from a few hundred gigabytes to a petabyte or more.

Key Features:

- Scalability: Easily scale your Redshift cluster by adding or removing nodes as your data and query requirements change.

- Performance: Optimized for high performance with columnar storage, data compression, and zone maps to reduce the amount of data scanned.

- Cost-Effective: Pay only for the resources you use, with a choice of on-demand pricing or reserved nodes to reduce costs.

- Integration: Seamlessly integrates with AWS services such as S3, RDS, DynamoDB, and EMR, as well as third-party tools.

Redshift Architecture

Cluster and Nodes:

- Cluster: A Redshift data warehouse is a collection of nodes, called a cluster. Each cluster runs a Redshift engine and contains one or more databases.

- Nodes: A node is a single instance within a Redshift cluster. Nodes are divided into Leader Nodes and Compute Nodes.

- Leader Node: Receives queries from client applications, builds the execution plan, and coordinates query execution and data distribution among the compute nodes.

- Compute Nodes: Store data and execute queries and computations as instructed by the leader node.

Node Types:

- Dense Compute (DC) Nodes: Use SSDs for storage, optimized for performance.

- Dense Storage (DS) Nodes: Use HDDs for storage, optimized for cost per terabyte. DS is an older generation; AWS now also offers RA3 nodes with managed storage.

Data Distribution:

- Data is distributed across compute nodes according to each table's distribution style (AUTO, KEY, EVEN, or ALL) to optimize query performance and storage efficiency.
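To make the distribution styles concrete, here is a minimal Python sketch of how rows might be placed under EVEN, KEY, and ALL. It is a simplified model, not Redshift's actual placement logic: the `distribute` function, the CRC32 hash, and the `orders` rows are all assumptions made for illustration.

```python
from zlib import crc32

def distribute(rows, style, num_nodes, key=None):
    """Place rows on nodes under a simplified model of one distribution style."""
    nodes = [[] for _ in range(num_nodes)]
    for i, row in enumerate(rows):
        if style == "EVEN":
            # Round-robin: placement ignores the row's contents.
            nodes[i % num_nodes].append(row)
        elif style == "KEY":
            # Hash the distribution key so equal values share a node.
            # (CRC32 is a stand-in; Redshift's hash function is internal.)
            nodes[crc32(str(row[key]).encode()) % num_nodes].append(row)
        elif style == "ALL":
            # A full copy of the table goes to every node.
            for node in nodes:
                node.append(row)
        else:
            raise ValueError(f"unsupported style: {style}")
    return nodes

# Hypothetical table: 20 orders placed by 5 customers.
orders = [{"order_id": i, "customer_id": i % 5} for i in range(20)]

even = distribute(orders, "EVEN", num_nodes=4)
by_key = distribute(orders, "KEY", num_nodes=4, key="customer_id")
everywhere = distribute(orders, "ALL", num_nodes=4)

print([len(n) for n in even])        # [5, 5, 5, 5]
print([len(n) for n in everywhere])  # [20, 20, 20, 20]
```

In this model, KEY keeps all rows for one customer on the same node, which is why joining two tables on a shared distribution key can avoid moving data between nodes. ALL trades extra storage for the same benefit on small lookup tables.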

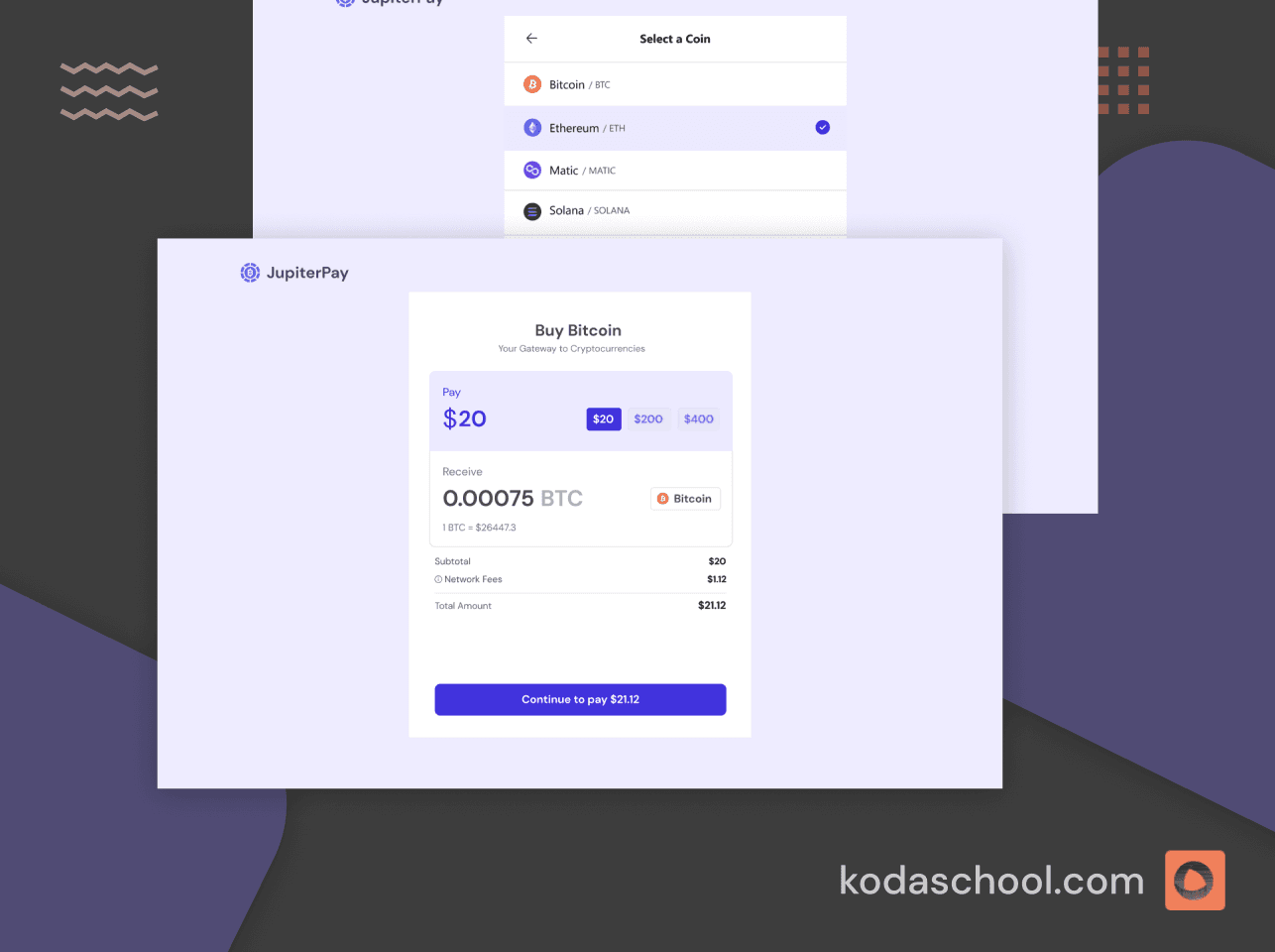

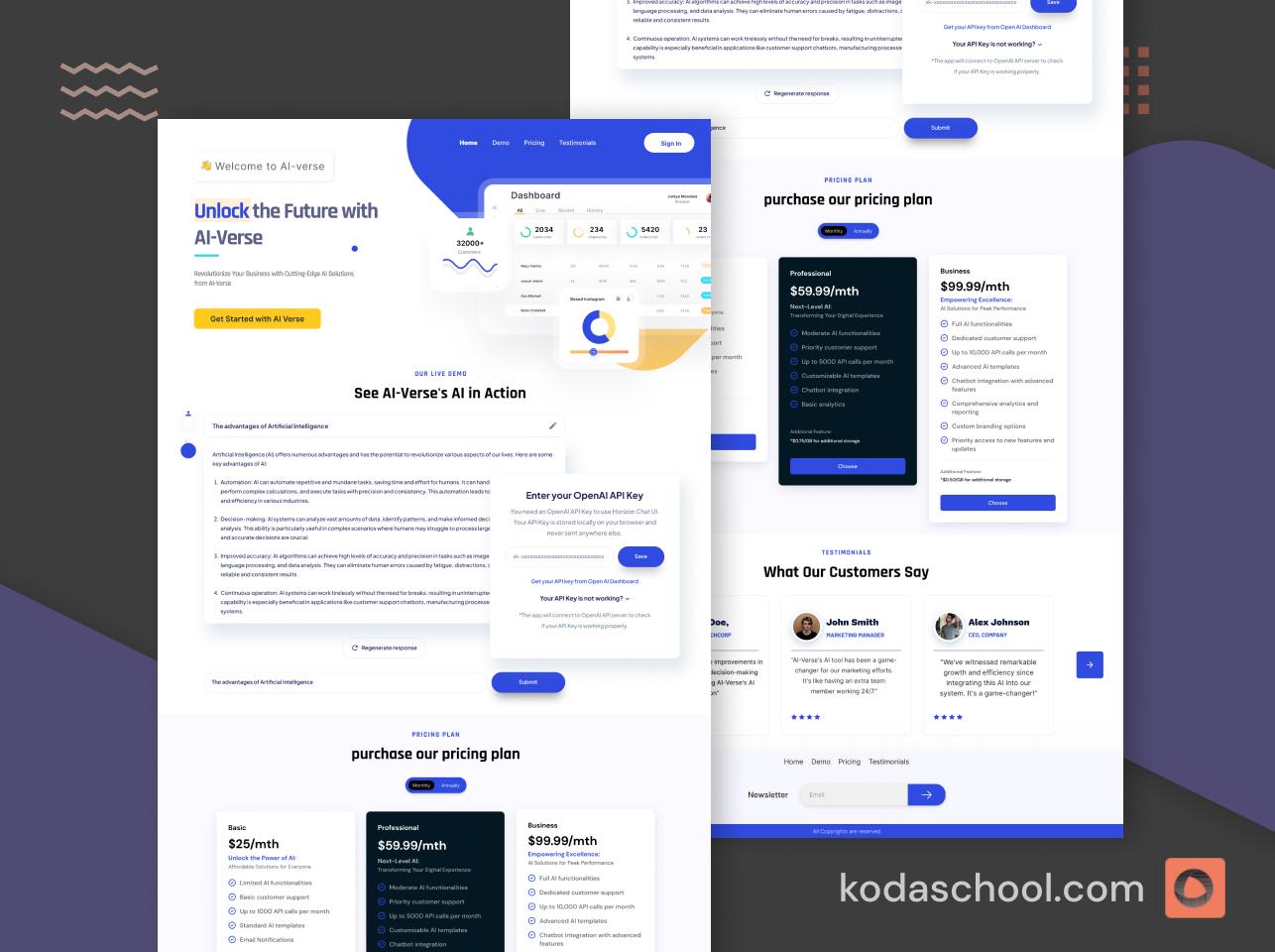

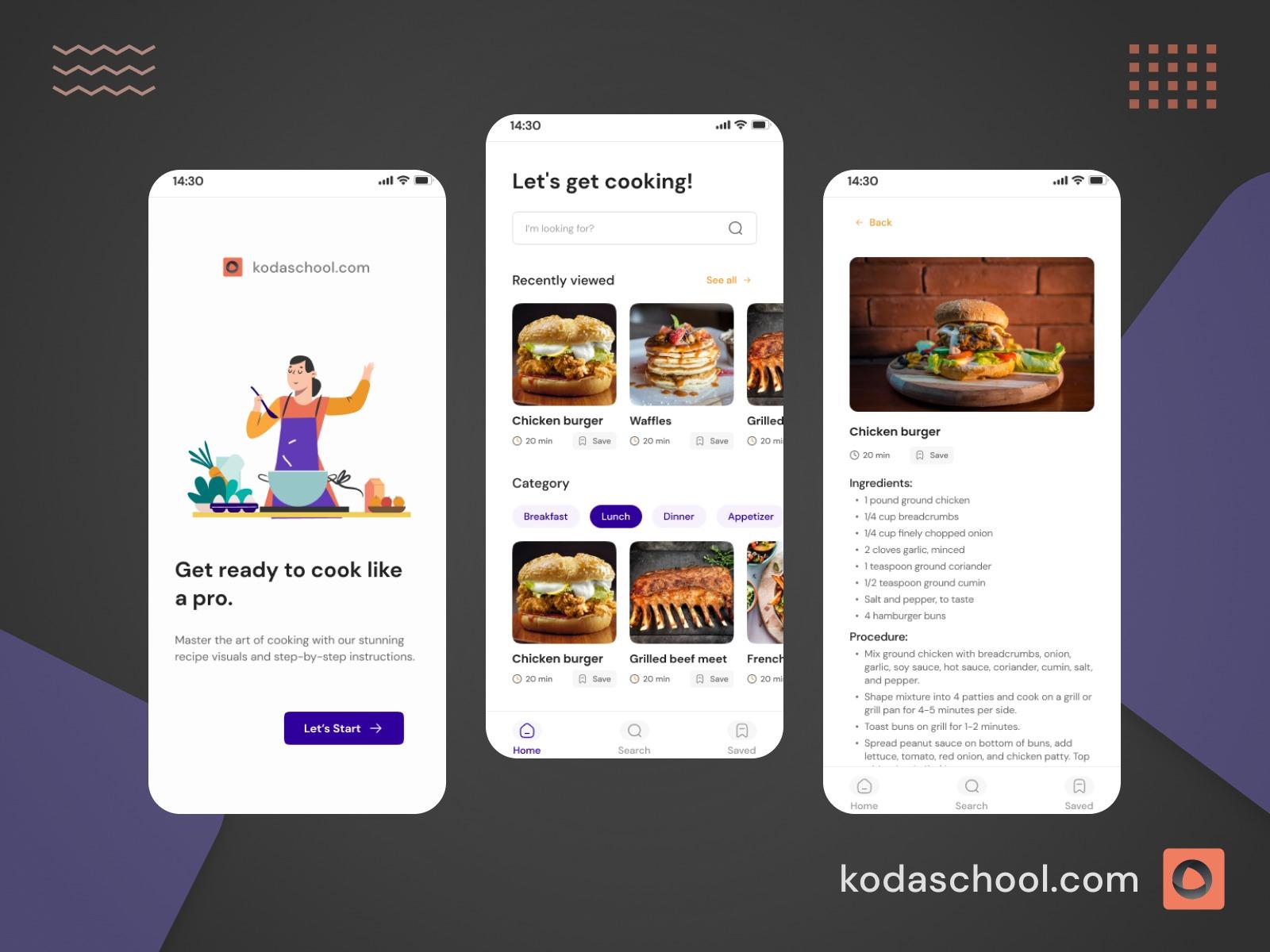

Key Concepts and Components

Columnar Storage:

- Redshift stores data in a columnar format, which significantly reduces the amount of I/O required to perform queries, enhancing performance.
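The I/O saving can be illustrated with a small Python sketch that stores the same hypothetical three-column table in a row layout and in a column layout, then sums one column. The table, the column names, and the fixed 8-byte encoding are assumptions for the example; Redshift's on-disk format is more elaborate.

```python
import struct

# Hypothetical table: (order_id, customer_id, amount), 8 bytes per value.
rows = [(i, i % 100, i * 1.5) for i in range(1000)]

# Row layout: the three values of each row are stored next to each other.
row_store = b"".join(struct.pack("<qqd", *r) for r in rows)

# Column layout: all values of one column are stored next to each other.
n = len(rows)
column_store = {
    "order_id": struct.pack(f"<{n}q", *(r[0] for r in rows)),
    "customer_id": struct.pack(f"<{n}q", *(r[1] for r in rows)),
    "amount": struct.pack(f"<{n}d", *(r[2] for r in rows)),
}

def sum_amount_row_store(buf):
    """SUM(amount) must read every byte, since amounts are interleaved."""
    total = 0.0
    for _order_id, _customer_id, amount in struct.iter_unpack("<qqd", buf):
        total += amount
    return total, len(buf)

def sum_amount_column_store(cols):
    """SUM(amount) reads only the bytes of the amount column."""
    buf = cols["amount"]
    values = struct.unpack(f"<{len(buf) // 8}d", buf)
    return sum(values), len(buf)

row_total, row_bytes = sum_amount_row_store(row_store)
col_total, col_bytes = sum_amount_column_store(column_store)

print(row_bytes)  # 24000
print(col_bytes)  # 8000
```

Both functions return the same total, but the column layout reads a third of the bytes here because the query touches one of three equally sized columns. The wider the table and the fewer columns a query needs, the larger that gap becomes.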

Data Compression:

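- Data is compressed to reduce storage costs and improve query performance, because less data has to be read from disk. Redshift can automatically choose a compression encoding for each column, for example when the COPY command loads data into an empty table.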
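Run-length encoding is one of the encodings Redshift supports (as RUNLENGTH), and it shows why columnar data compresses well: values within a column are often repetitive. The sketch below is a plain Python illustration of the idea, with a hypothetical `status` column, not Redshift's internal implementation.

```python
from itertools import groupby

def rle_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]

def rle_decode(pairs):
    """Expand (value, run_length) pairs back into the original sequence."""
    decoded = []
    for value, run_length in pairs:
        decoded.extend([value] * run_length)
    return decoded

# Hypothetical low-cardinality column with long runs of equal values.
status = ["shipped"] * 500 + ["pending"] * 300 + ["returned"] * 200

encoded = rle_encode(status)
print(encoded)  # [('shipped', 500), ('pending', 300), ('returned', 200)]
print(rle_decode(encoded) == status)  # True
```

A thousand stored values shrink to three pairs in this case. The same encoding would do poorly on a column of unique IDs, which is why the best encoding depends on each column's data.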

Massively Parallel Processing (MPP):

- Redshift uses MPP to distribute data and query load across multiple nodes, allowing for fast execution of complex queries.
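As a rough sketch of the MPP idea, the Python below splits a hypothetical `amounts` column across four workers that stand in for compute nodes. Each worker computes a partial result over its own data, and a function playing the leader's role combines the partials. Threads are used here only to mimic parallel workers; this is not how Redshift is implemented.

```python
from concurrent.futures import ThreadPoolExecutor

def compute_node_partial(local_rows):
    """Each compute node aggregates only the rows it stores."""
    return sum(local_rows), len(local_rows)

def leader_average(node_data):
    """The leader fans work out to the nodes, then merges their partials."""
    with ThreadPoolExecutor(max_workers=len(node_data)) as pool:
        partials = list(pool.map(compute_node_partial, node_data))
    total = sum(partial_sum for partial_sum, _ in partials)
    count = sum(partial_count for _, partial_count in partials)
    return total / count

# Hypothetical column of 100 amounts, spread evenly over 4 nodes.
amounts = list(range(1, 101))
node_data = [amounts[i::4] for i in range(4)]

print(leader_average(node_data))  # 50.5
```

Note that the nodes return a sum and a count rather than a local average. Averages of averages are only correct when every node holds the same number of rows, so partial results have to be chosen so that they can be merged correctly.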

SQL Interface:

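- Redshift is based on PostgreSQL and provides a familiar SQL interface for querying and managing data, although it is not a drop-in PostgreSQL replacement and some PostgreSQL features behave differently or are unsupported.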

Redshift Spectrum:

- Allows you to run queries against exabytes of data in S3 without having to load the data into Redshift tables.

Data Loading and Unloading

Loading Data:

- Data can be loaded into Redshift from various sources, including Amazon S3, DynamoDB, EMR, RDS, and on-premises databases using AWS Data Pipeline, AWS Glue, or third-party ETL tools.

- COPY Command: The primary method for bulk loading data into Redshift. It reads in parallel from flat files stored in S3 or directly from DynamoDB tables.

Unloading Data:

- UNLOAD Command: Allows you to export data from Redshift tables back to S3 in a parallel and efficient manner.
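The two commands might look like the statements produced by this Python sketch. The helper functions, table name, bucket paths, and IAM role ARN are hypothetical placeholders. The helpers only build SQL text and do not connect to a cluster, and the string interpolation is for illustration only, so it should not be used with untrusted input.

```python
def build_copy(table, s3_uri, iam_role, fmt="CSV", ignore_header=1):
    """Build a COPY statement that loads files under an S3 prefix."""
    lines = [
        f"COPY {table}",
        f"FROM '{s3_uri}'",
        f"IAM_ROLE '{iam_role}'",
        f"FORMAT AS {fmt}",
    ]
    if fmt == "CSV" and ignore_header:
        # Skip header rows at the top of each CSV file.
        lines.append(f"IGNOREHEADER {ignore_header}")
    return "\n".join(lines) + ";"

def build_unload(query, s3_prefix, iam_role, fmt="PARQUET"):
    """Build an UNLOAD statement that exports a query result to S3."""
    # UNLOAD takes the query as a quoted string, so single quotes
    # inside the query are doubled.
    escaped = query.replace("'", "''")
    lines = [
        f"UNLOAD ('{escaped}')",
        f"TO '{s3_prefix}'",
        f"IAM_ROLE '{iam_role}'",
        f"FORMAT AS {fmt}",
    ]
    return "\n".join(lines) + ";"

# Placeholder values, not real resources.
role = "arn:aws:iam::123456789012:role/ExampleRedshiftRole"

copy_sql = build_copy("sales", "s3://example-bucket/incoming/sales/", role)
unload_sql = build_unload(
    "SELECT * FROM sales WHERE sale_date >= '2024-01-01'",
    "s3://example-bucket/exports/sales_",
    role,
)

print(copy_sql)
print(unload_sql)
```

Pointing COPY at a prefix that holds several files, rather than one large file, is what lets the compute nodes load in parallel.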

Performance Optimization

Best Practices:

- Distribution Keys and Sort Keys: Choosing the right distribution and sort keys can significantly improve query performance by optimizing data distribution and reducing I/O.

- Workload Management (WLM): Configure WLM to manage query queues and priorities, ensuring efficient use of resources.

- Compression and Encoding: Use optimal compression and encoding techniques to reduce storage requirements and improve performance.

- Analyzing and Vacuuming: Regularly run ANALYZE to keep the query planner's table statistics current, and VACUUM to reclaim space left by deleted rows and restore sort order.
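Why a sort key reduces I/O can be shown with a small simulation of zone maps, the per-block minimum and maximum values that let a scan skip blocks that cannot match a filter. The block size, the `days` column, and the function names below are assumptions for the sketch; real Redshift blocks are 1 MB and hold many more values.

```python
import random

BLOCK_SIZE = 100  # values per block in this toy model

def build_zone_map(values, block_size=BLOCK_SIZE):
    """Split a column into blocks and record each block's min and max."""
    blocks = [values[i:i + block_size] for i in range(0, len(values), block_size)]
    return [(min(block), max(block), block) for block in blocks]

def scan(zone_map, low, high):
    """Count blocks read and rows matched for low <= value <= high."""
    blocks_read = 0
    matches = 0
    for block_min, block_max, block in zone_map:
        if block_max < low or block_min > high:
            continue  # The zone map proves this block has no matching rows.
        blocks_read += 1
        matches += sum(low <= value <= high for value in block)
    return blocks_read, matches

random.seed(42)
days = list(range(10_000))       # hypothetical column stored in sort-key order
unsorted_days = days[:]
random.shuffle(unsorted_days)    # same values with no useful ordering

sorted_reads, sorted_matches = scan(build_zone_map(days), 2000, 2199)
unsorted_reads, unsorted_matches = scan(build_zone_map(unsorted_days), 2000, 2199)

print(sorted_reads, sorted_matches)      # 2 200
print(unsorted_reads, unsorted_matches)
```

Both scans find the same 200 rows, but the sorted column answers the range filter from two blocks, while the shuffled column has to read far more because nearly every block's min/max range overlaps the filter. This is also why sort order that degrades after many inserts and deletes is worth restoring with VACUUM.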

Security and Compliance

Encryption:

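- Data at rest can be encrypted with keys managed in AWS Key Management Service (KMS), using either an AWS-managed key or a customer-managed key. Data in transit is protected with SSL/TLS connections between clients and the cluster.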

Access Control:

- Redshift integrates with AWS Identity and Access Management (IAM) for user authentication and authorization.

- Redshift supports network isolation through VPC, security groups, and enhanced VPC routing.

Compliance:

- Redshift is in scope for a range of AWS compliance programs, including SOC 1, SOC 2, SOC 3, and PCI DSS, is HIPAA eligible, and can be used to build workloads that meet GDPR requirements.

Monitoring and Maintenance

Monitoring:

- Use Amazon CloudWatch to monitor cluster performance metrics such as CPU utilization, disk space, and query performance.

- Redshift Console provides insights into query performance, system performance, and database health.
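A CloudWatch query for a cluster metric could be prepared as in the Python sketch below. The `AWS/Redshift` namespace, the `CPUUtilization` metric, and the `ClusterIdentifier` dimension are the names CloudWatch uses for Redshift. The cluster name, helper functions, threshold, and sample datapoints are hypothetical. The block only builds the request and checks sample data, so it runs without AWS credentials.

```python
from datetime import datetime, timedelta, timezone

def redshift_metric_request(cluster_id, metric_name, hours=1, period_seconds=300):
    """Build keyword arguments for CloudWatch's get_metric_statistics call."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/Redshift",
        "MetricName": metric_name,
        "Dimensions": [{"Name": "ClusterIdentifier", "Value": cluster_id}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": period_seconds,
        "Statistics": ["Average"],
    }

def breaches(datapoints, threshold):
    """Return the datapoints whose average exceeds the threshold."""
    return [point for point in datapoints if point["Average"] > threshold]

request = redshift_metric_request("example-cluster", "CPUUtilization")

# With boto3 installed and credentials configured, the request could be sent as:
#   import boto3
#   response = boto3.client("cloudwatch").get_metric_statistics(**request)
#   datapoints = response["Datapoints"]

# Made-up datapoints standing in for a real response.
sample_datapoints = [{"Average": 35.0}, {"Average": 91.5}, {"Average": 62.0}]
hot = breaches(sample_datapoints, threshold=80.0)

print(hot)  # [{'Average': 91.5}]
```

In practice a CloudWatch alarm on the same metric is usually a better fit than polling, since it can notify you without a script running.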

Maintenance:

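- Redshift applies engine updates and patches during the cluster's maintenance window. Schedule that window for a low-traffic period so the cluster picks up the latest features and performance improvements with minimal disruption.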

- Perform routine maintenance tasks such as backing up data, analyzing, and vacuuming tables.

Use Cases

Data Warehousing:

- Ideal for large-scale data warehousing solutions, supporting complex queries and large datasets.

Business Intelligence:

- Integrates seamlessly with BI tools like Tableau, Looker, and Amazon QuickSight for advanced data analysis and visualization.

Log and Event Analysis:

- Efficiently store and analyze logs and event data for monitoring and troubleshooting applications.

Machine Learning:

- Use Redshift as a data source for machine learning models, leveraging integrated services like Amazon SageMaker.

Conclusion

Amazon Redshift is a powerful and flexible data warehouse solution that enables organizations to efficiently store, query, and analyze large volumes of data. By understanding its architecture, key features, and best practices, businesses can leverage Redshift to gain valuable insights and drive data-driven decision-making. Whether you are looking to implement a data warehouse, enhance your BI capabilities, or analyze logs and events, Amazon Redshift offers the scalability, performance, and integration needed to meet your data analytics requirements.

Sample Questions

Question 1

Which Amazon Redshift feature allows you to run queries against exabytes of data stored in Amazon S3 without having to load the data into Redshift tables?

A. Redshift Spectrum

B. Amazon Athena

C. AWS Glue

D. Redshift Data Sharing

Answer: A

Redshift Spectrum allows you to extend the analytic capabilities of Redshift beyond the data stored in your local Redshift tables to data stored in S3. This enables you to query large datasets stored in S3 without having to load them into Redshift.

Question 2

What is the primary method for loading data into Amazon Redshift from flat files stored in Amazon S3?

A. INSERT Command

B. COPY Command

C. LOAD Command

D. IMPORT Command

Answer: B

The COPY command is the most efficient way to load large datasets into Amazon Redshift from flat files stored in Amazon S3, DynamoDB tables, or other sources. It is optimized for performance and can load data in parallel.

Question 3

Which type of Redshift node should you choose if your primary requirement is high performance with SSD storage?

A. Dense Compute (DC) Nodes

B. Dense Storage (DS) Nodes

C. General Purpose (GP) Nodes

D. High I/O (HI) Nodes

Answer: A

Dense Compute (DC) nodes use SSD storage and are optimized for high-performance data warehousing. They are suitable for workloads that require fast query performance and low-latency access to data.

Question 4

Which AWS service can be used to monitor the performance metrics of an Amazon Redshift cluster?

A. Amazon CloudWatch

B. AWS CloudTrail

C. AWS Config

D. Amazon QuickSight

Answer: A

Amazon CloudWatch is used to monitor the performance metrics of an Amazon Redshift cluster. It provides detailed insights into metrics such as CPU utilization, disk space usage, and query performance.

Question 5

What is the purpose of defining distribution keys and sort keys in Amazon Redshift?

A. To manage user authentication and authorization

B. To optimize data compression and encoding

C. To control network access to the cluster

D. To optimize query performance and data distribution

Answer: D

Distribution keys and sort keys are used to optimize query performance and data distribution in Amazon Redshift. Distribution keys determine how data is distributed across nodes, while sort keys define the order in which data is stored within each table, helping to improve the efficiency of query execution.